1. Terrain Ecology & Procedural Pipeline (June)

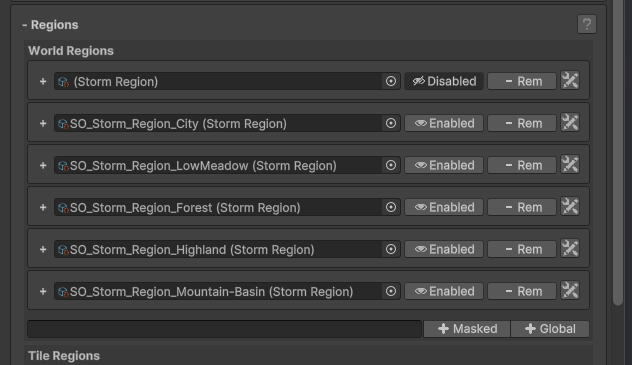

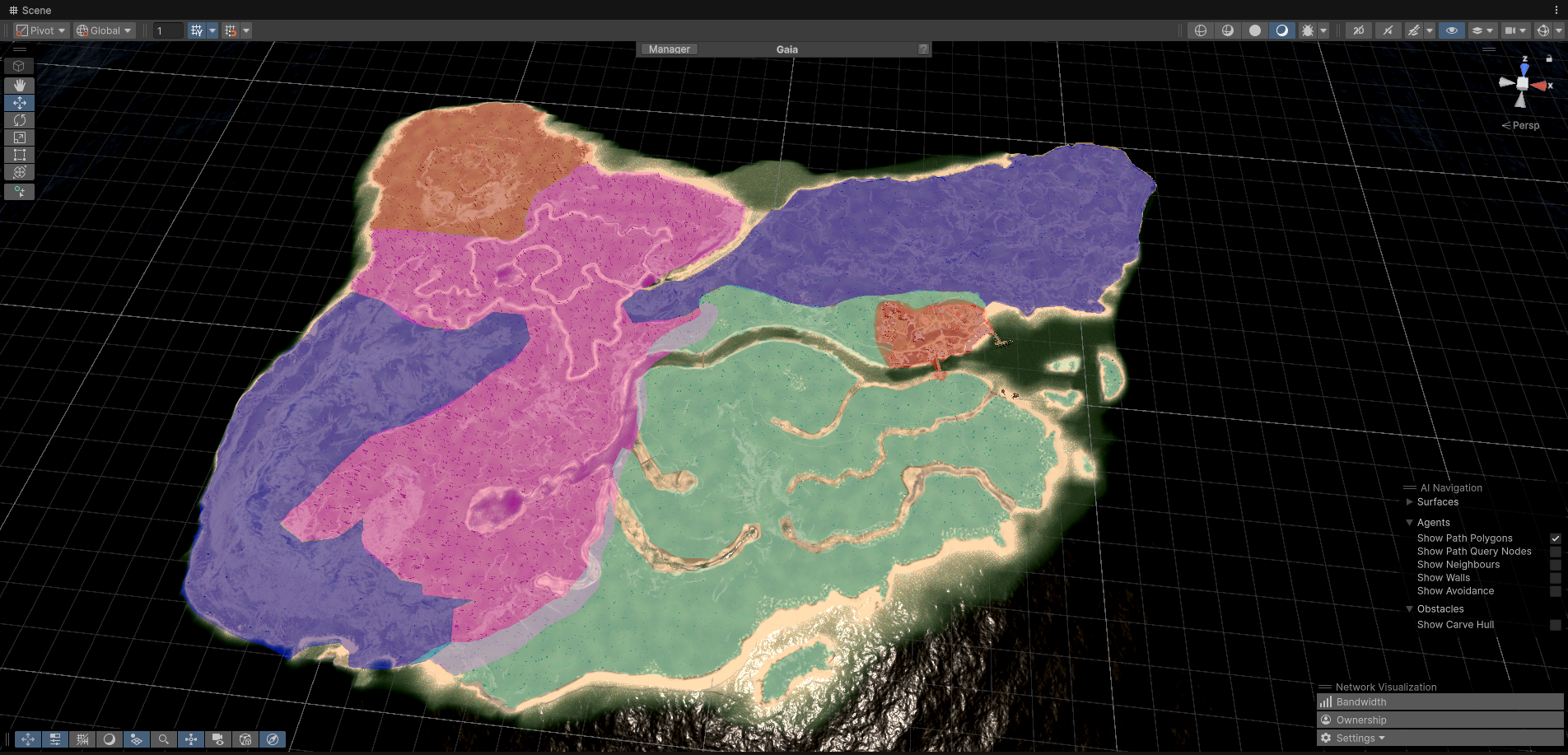

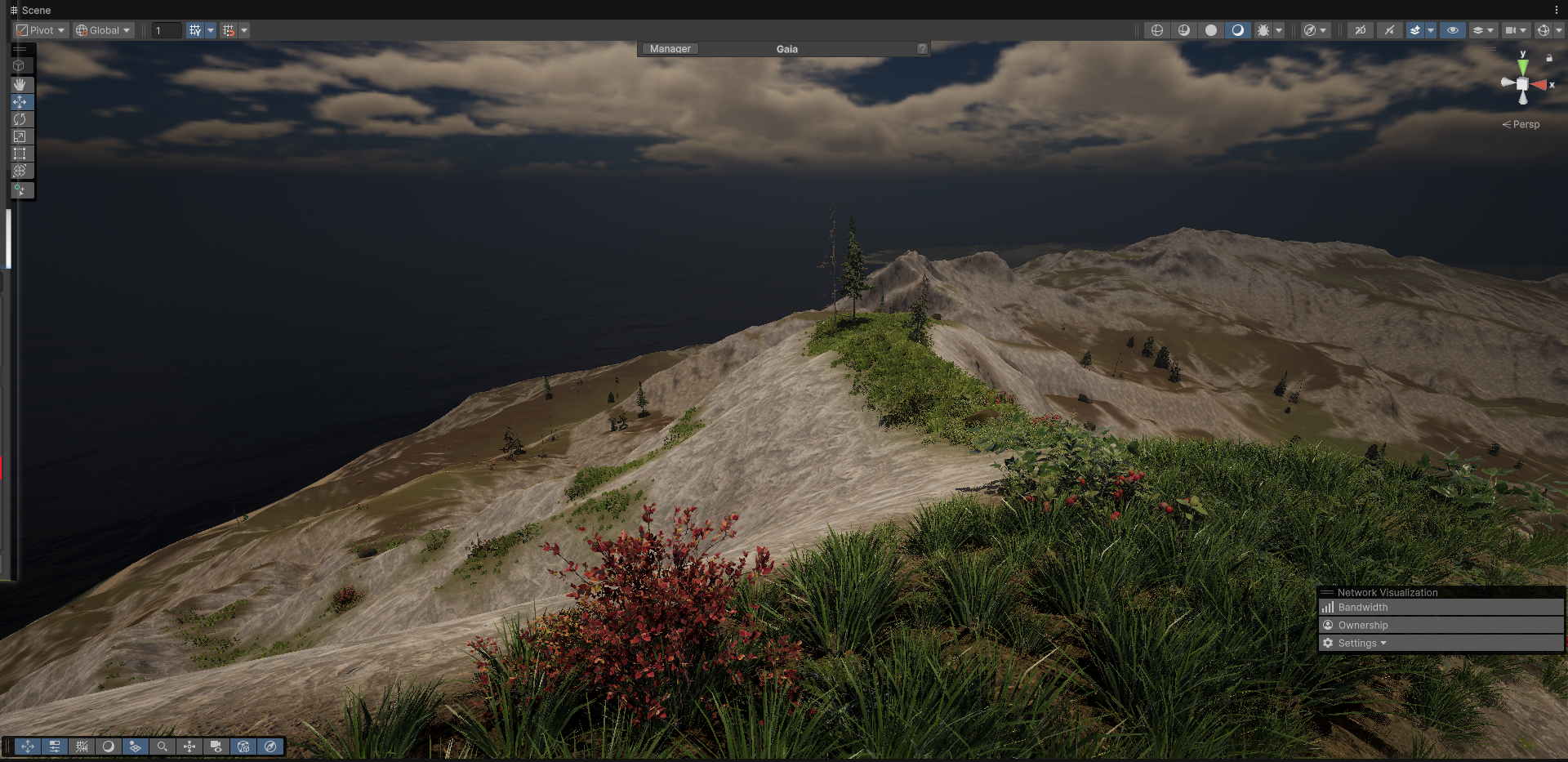

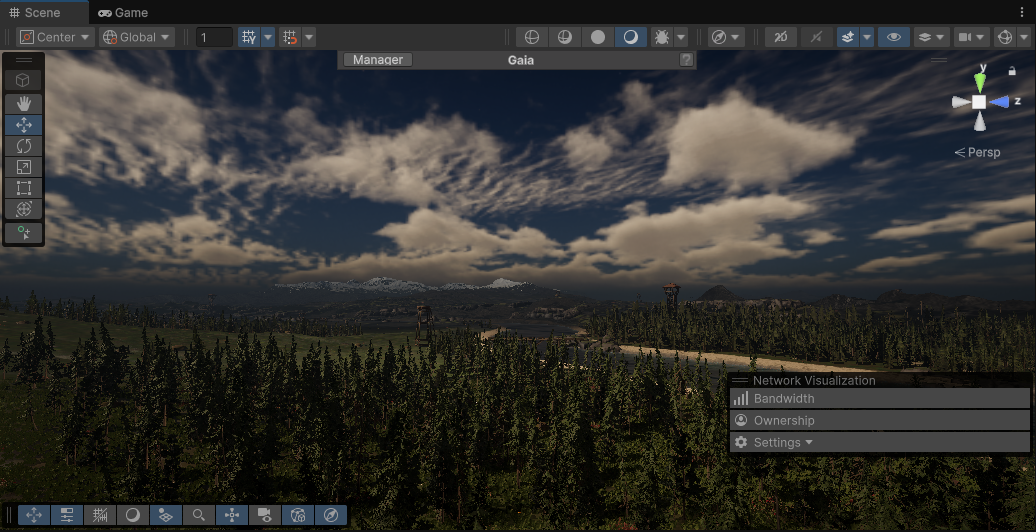

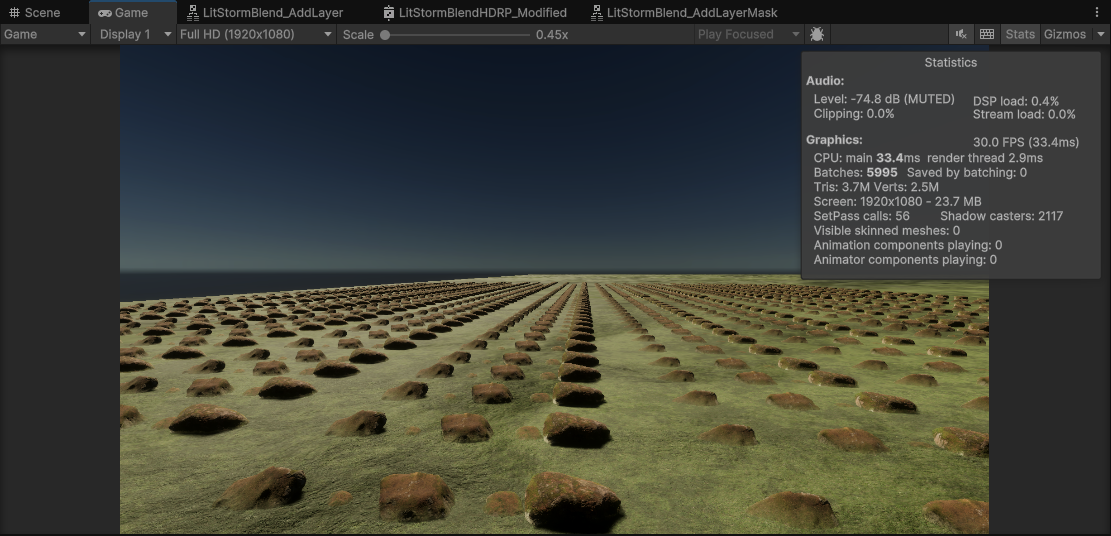

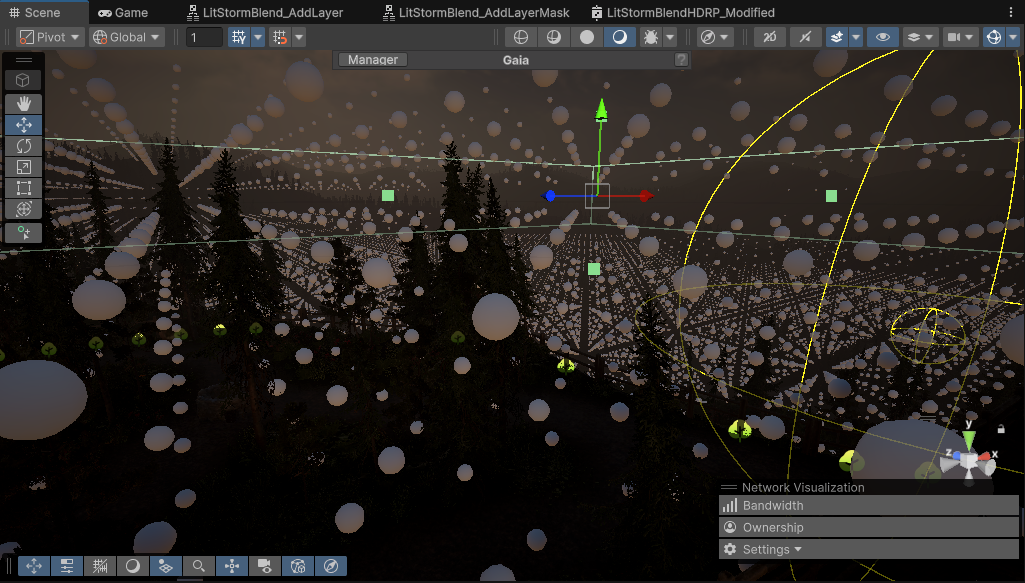

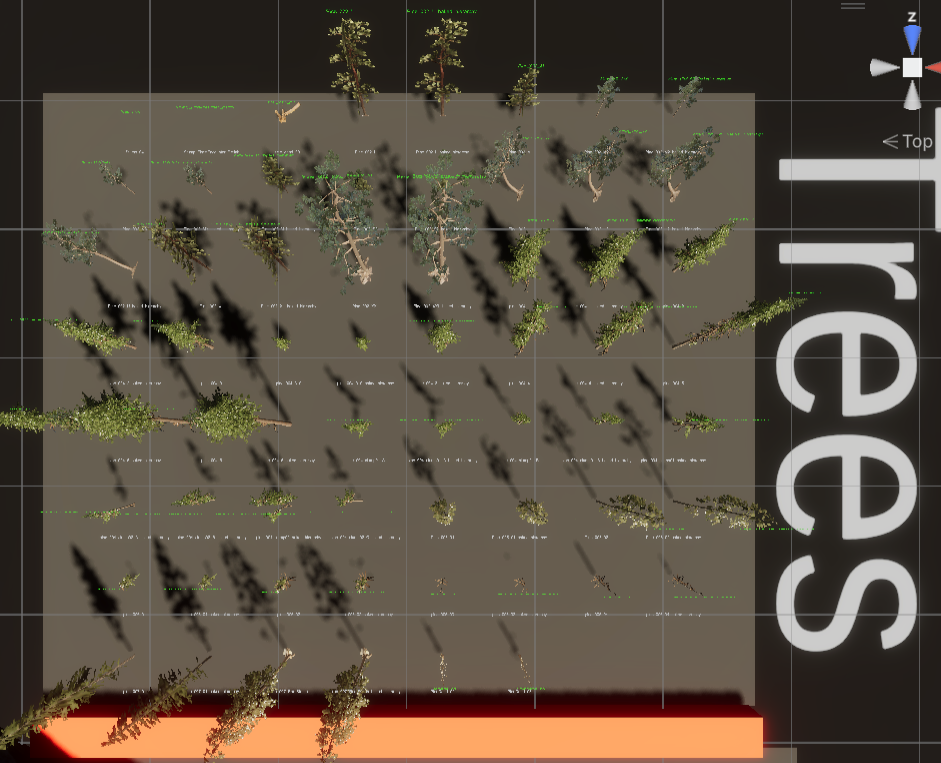

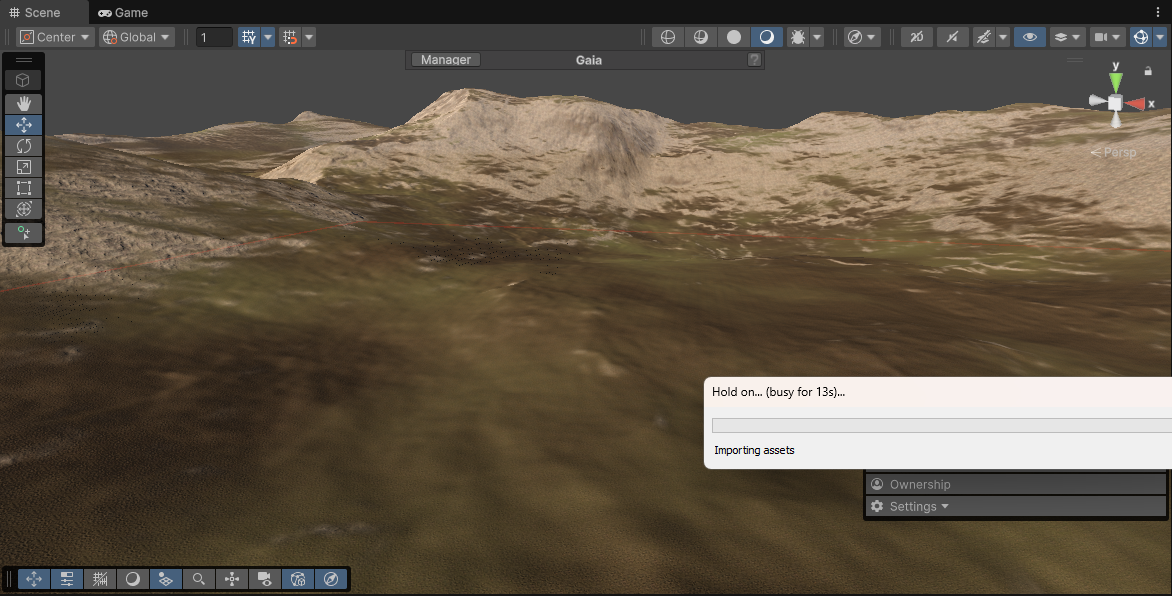

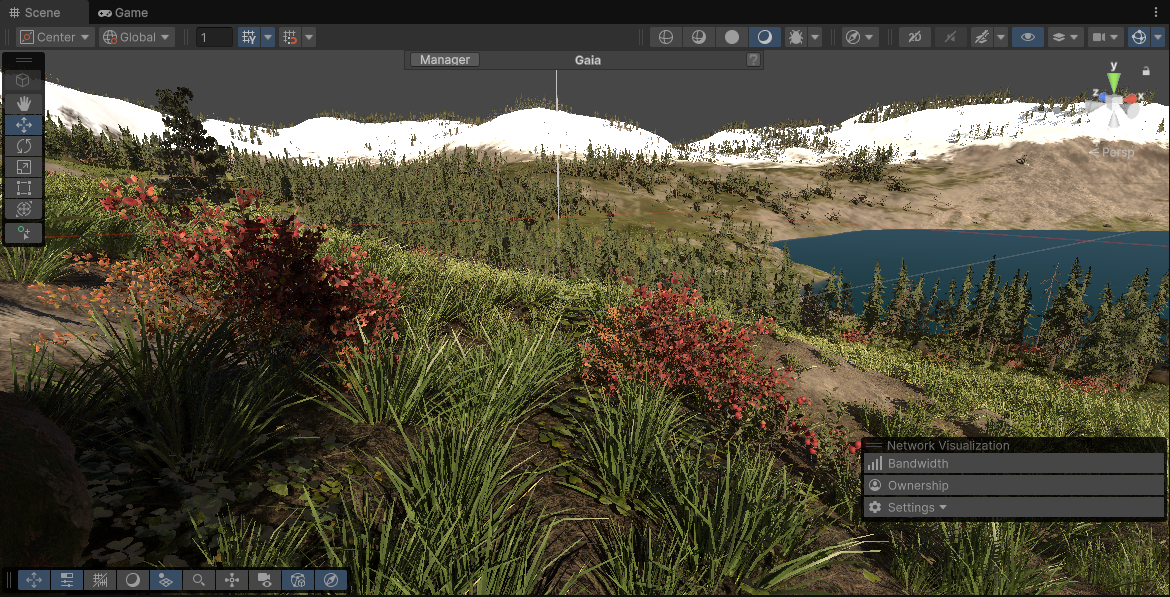

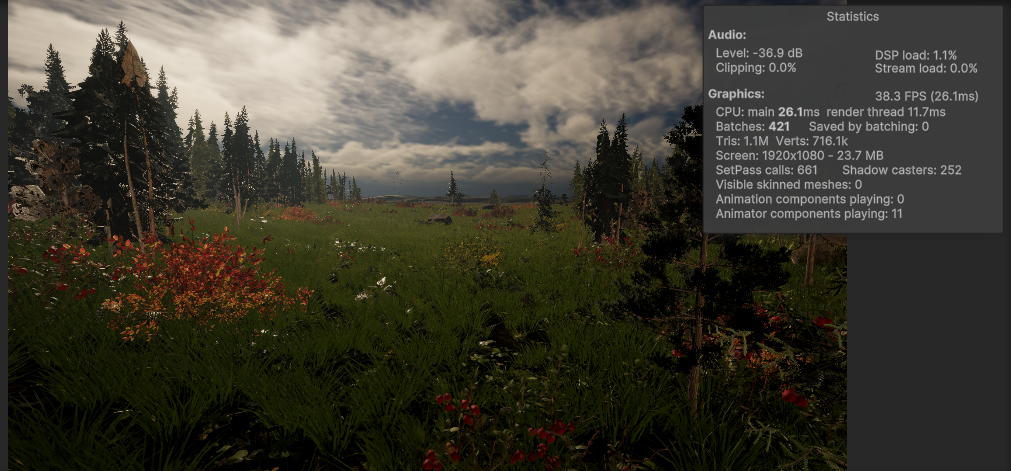

Ecological Data Conversion: Implemented a data conversion workflow to map vegetation generation rules from Gaia Biomes to Storm FeatureSets, enabling automated, community-based vegetation distribution.
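As a rough illustration of what such a conversion involves, the sketch below maps per-biome spawn rules onto feature-set entries. The `BiomeRule` and `FeatureEntry` schemas, their field names, and the sample values are all hypothetical; they do not mirror the actual Gaia or Storm data formats.

```python
from dataclasses import dataclass

# Hypothetical, simplified schemas: the field names are illustrative only
# and are NOT the real Gaia Biome or Storm FeatureSet formats.
@dataclass
class BiomeRule:
    prefab: str
    min_height: float  # metres
    max_height: float  # metres
    max_slope: float   # degrees
    density: float     # instances per square metre

@dataclass
class FeatureEntry:
    prefab: str
    height_range: tuple
    slope_range: tuple
    density: float

def convert_biome(name, rules):
    """Map every spawn rule of one biome to an entry in one feature set."""
    entries = [
        FeatureEntry(
            prefab=r.prefab,
            height_range=(r.min_height, r.max_height),
            slope_range=(0.0, r.max_slope),
            density=r.density,
        )
        for r in rules
    ]
    return {"feature_set": name, "entries": entries}

# Made-up sample rules for one plant community.
pine_forest = [
    BiomeRule("Pine_A", 20.0, 180.0, 35.0, 0.02),
    BiomeRule("Fern_B", 10.0, 120.0, 25.0, 0.40),
]
feature_set = convert_biome("PineForest", pine_forest)
```

Keeping one feature set per biome preserves the grouping of species into communities, so the converted data can still be distributed community by community.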

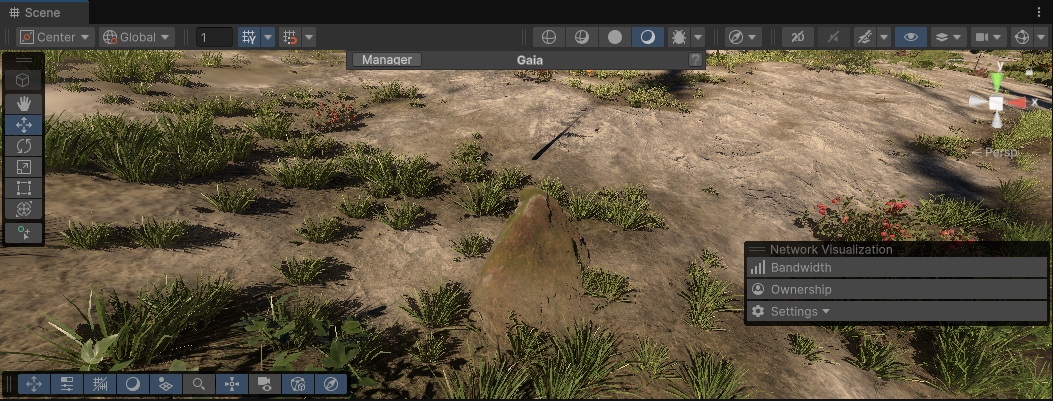

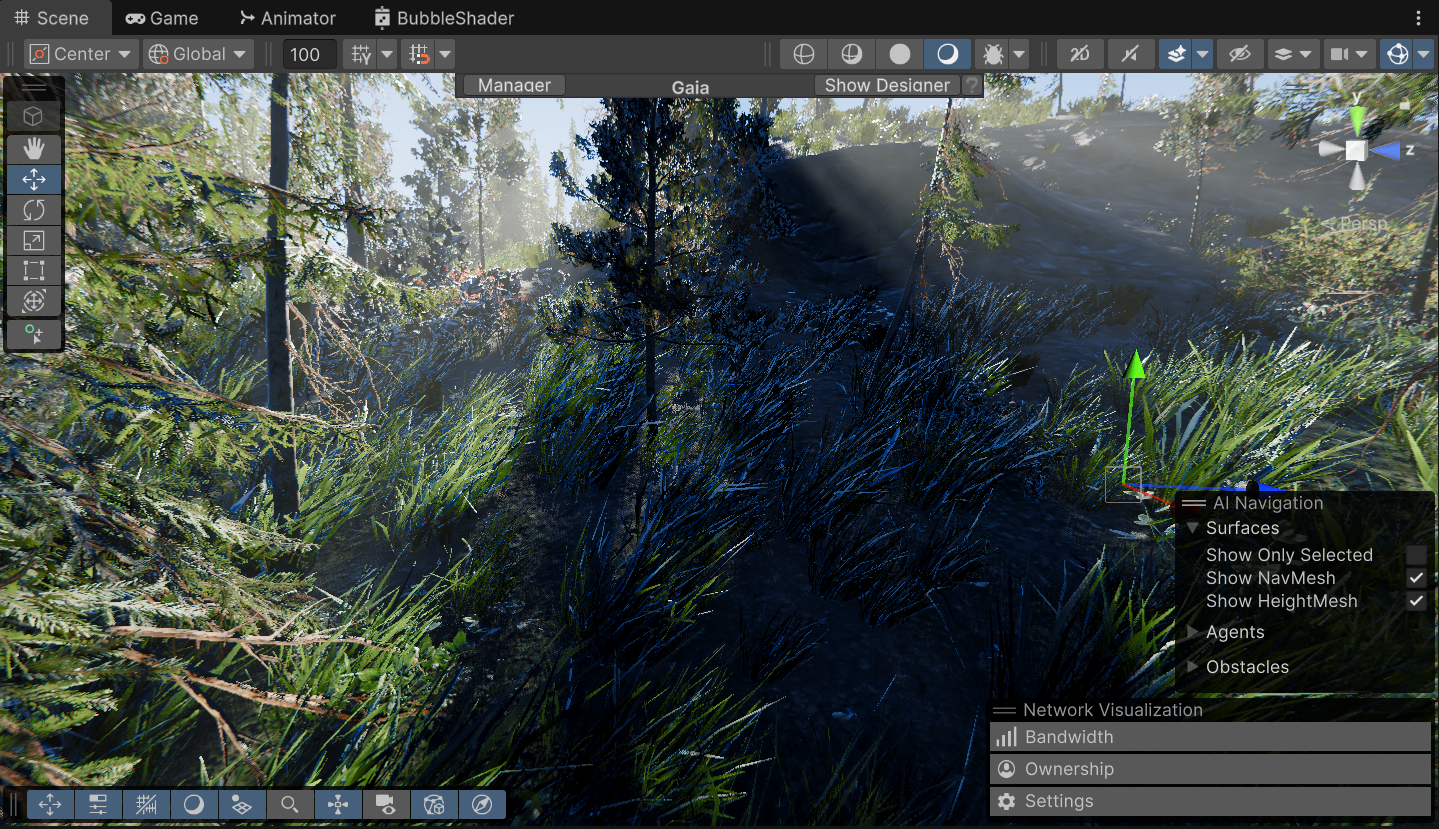

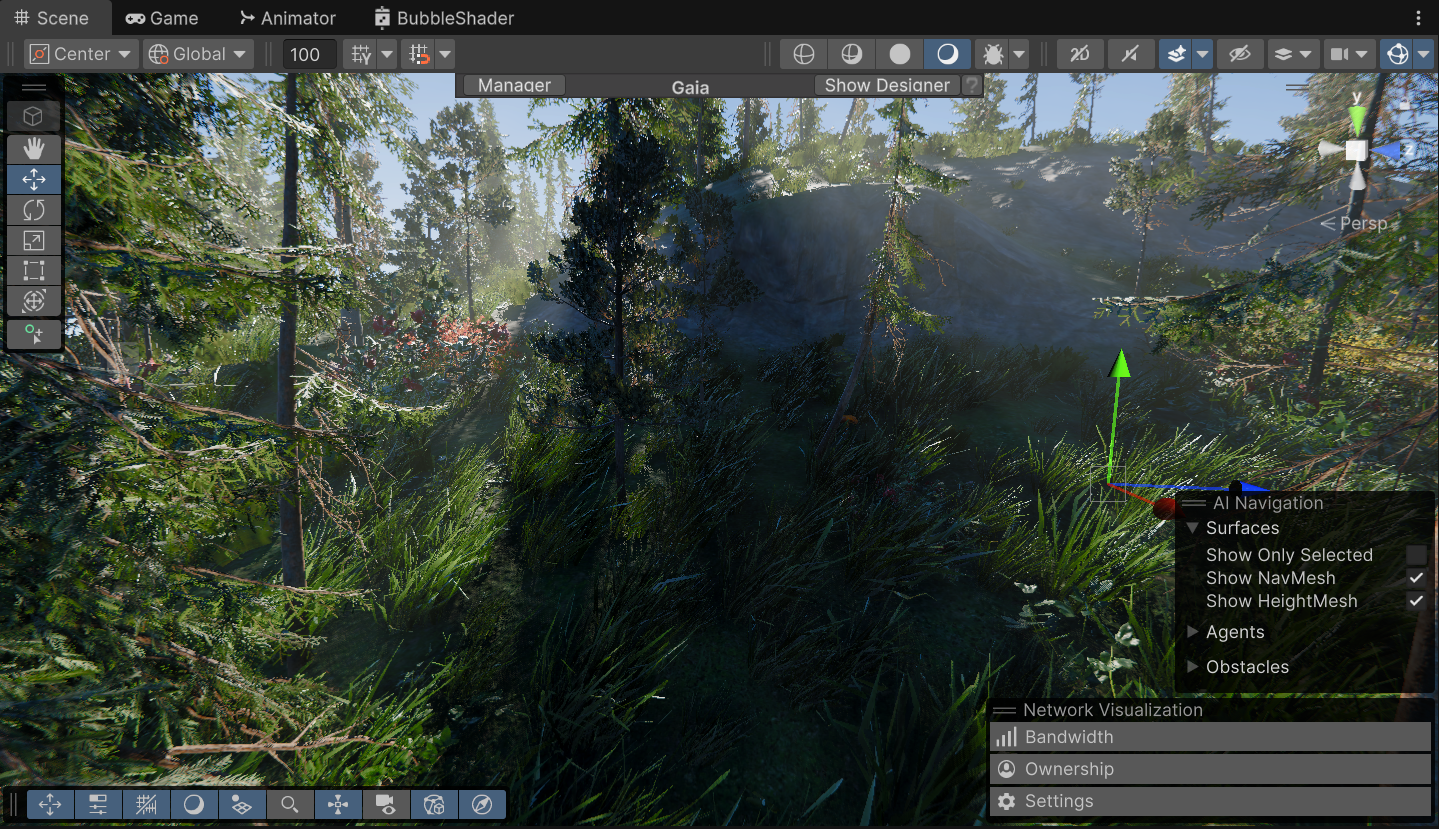

Ecological Data After Conversion

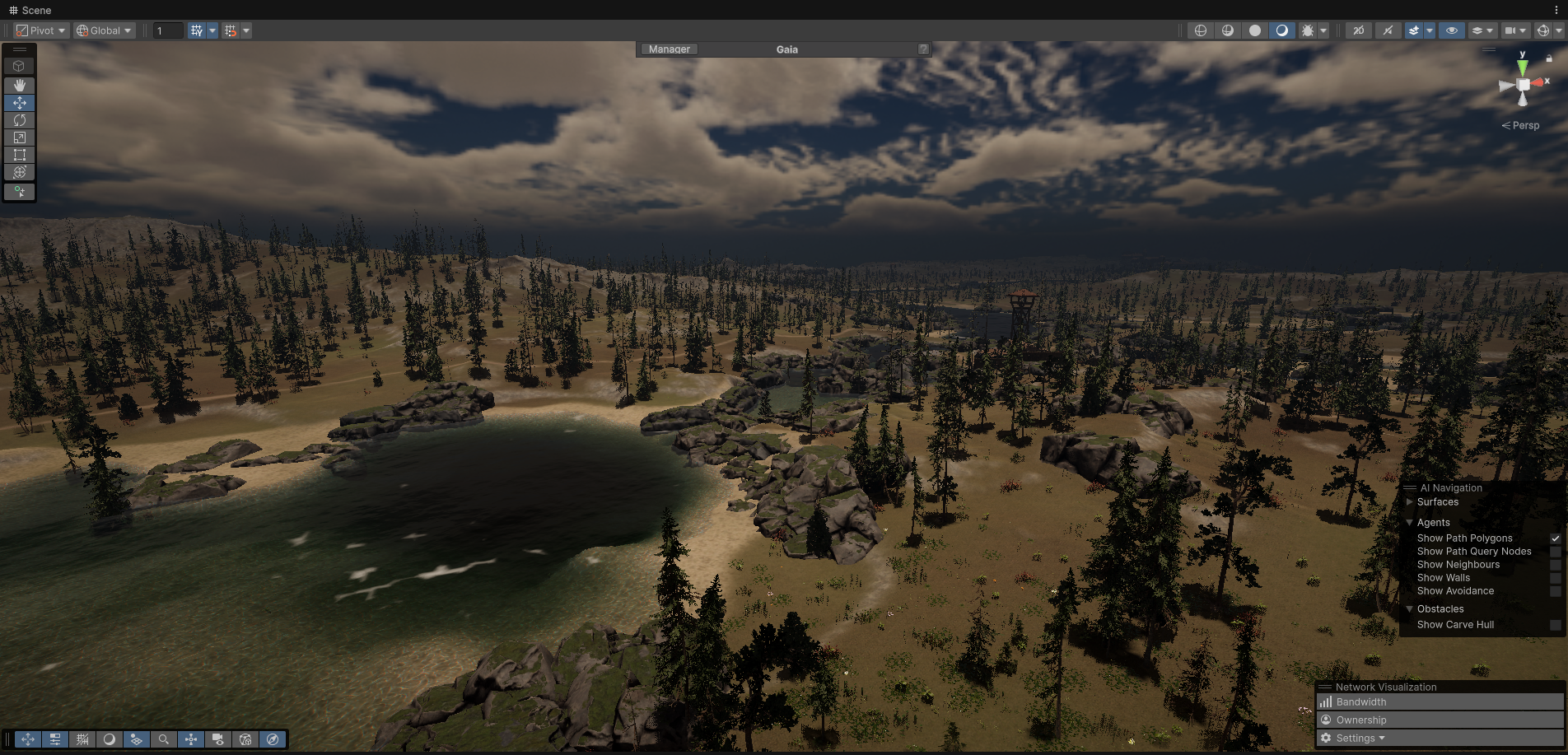

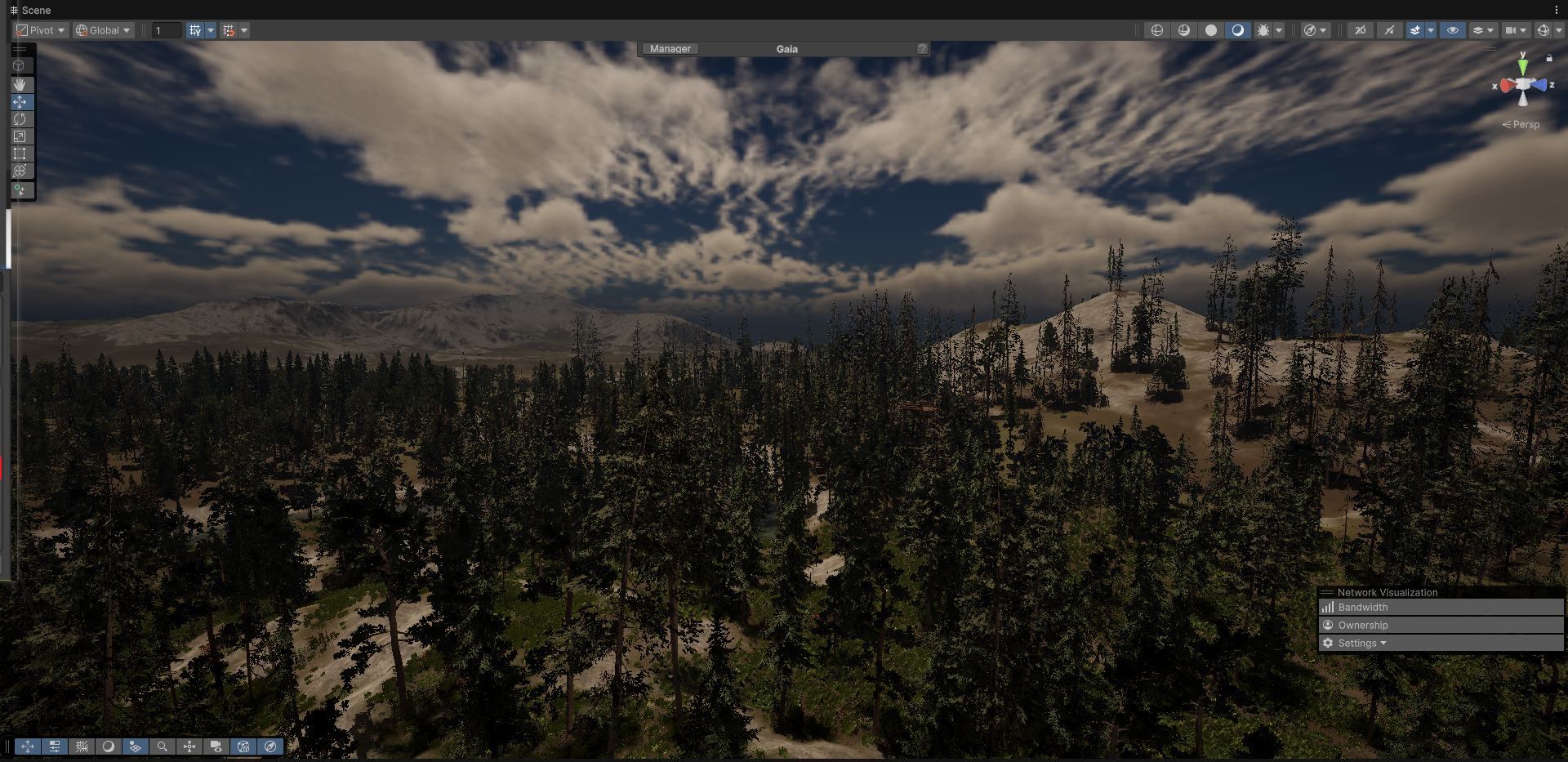

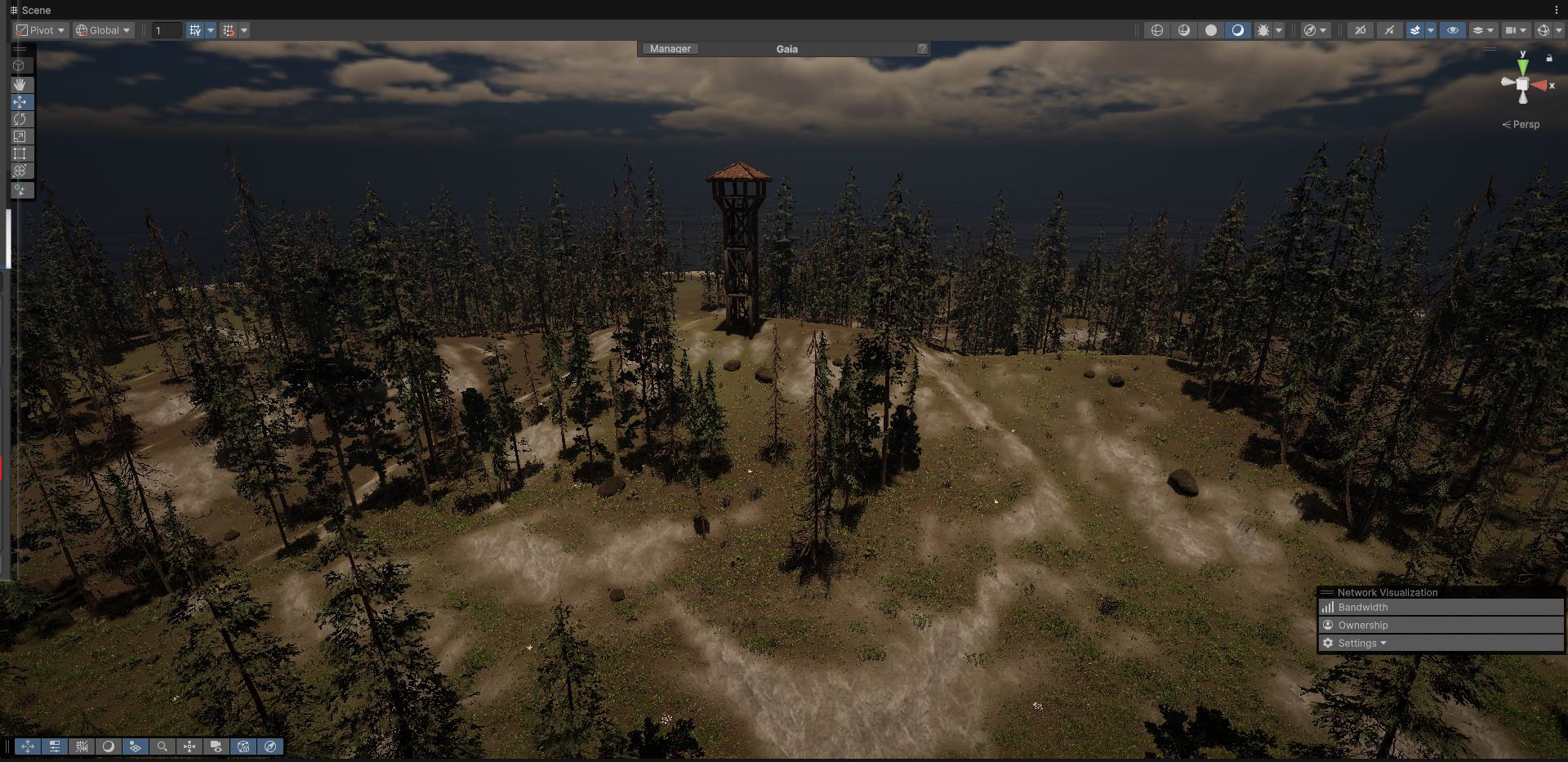

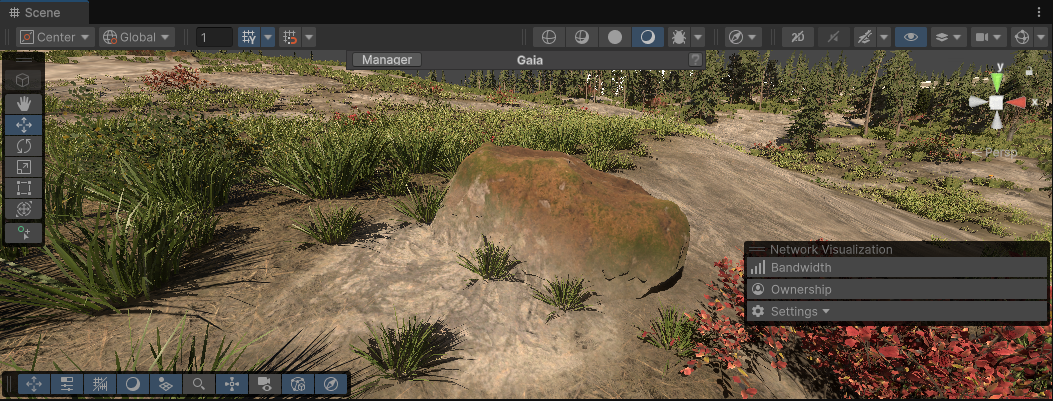

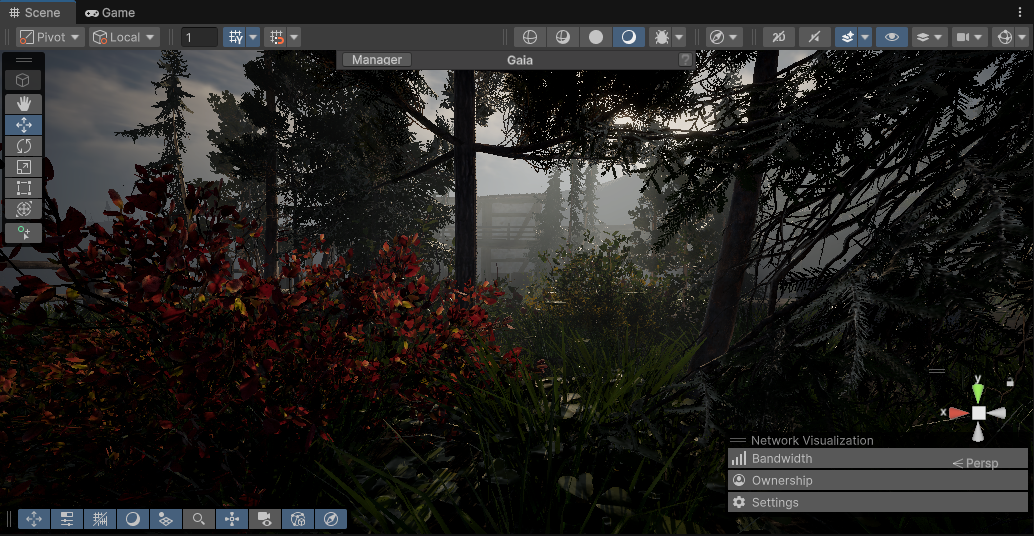

Hybrid Modification Strategy: Formulated a “Procedural Generation + Manual Tuning” hybrid workflow. While leveraging procedural generation to ensure ecological diversity and density, I coordinated with level designers to clear or adjust vegetation in specific areas based on gameplay requirements (e.g., pathways, combat zones, monster spawn points).
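The clearing step can be pictured as a filter applied after procedural placement. The sketch below is a minimal illustration and not the project's actual tooling: it assumes gameplay areas are described as circular exclusion zones, and the function name and sample coordinates are hypothetical.

```python
import math

def clear_gameplay_zones(instances, zones):
    """Keep only instances lying outside every circular exclusion zone.

    instances: list of (x, z) world positions produced by the generator
    zones:     list of (center_x, center_z, radius) marked by level designers
    """
    def inside(point, zone):
        cx, cz, radius = zone
        return math.hypot(point[0] - cx, point[1] - cz) <= radius

    return [p for p in instances if not any(inside(p, z) for z in zones)]

# Made-up data: four generated plants, one combat zone, one spawn point.
instances = [(0.0, 0.0), (5.0, 5.0), (12.0, 0.0), (30.0, 30.0)]
zones = [(10.0, 0.0, 3.0), (0.0, 0.0, 1.0)]
kept = clear_gameplay_zones(instances, zones)
```

Because the filter runs on the generated output, the procedural rules stay untouched and the cleared areas can be re-applied whenever the vegetation is regenerated.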

Hybrid Modification: Gameplay Area Clearing

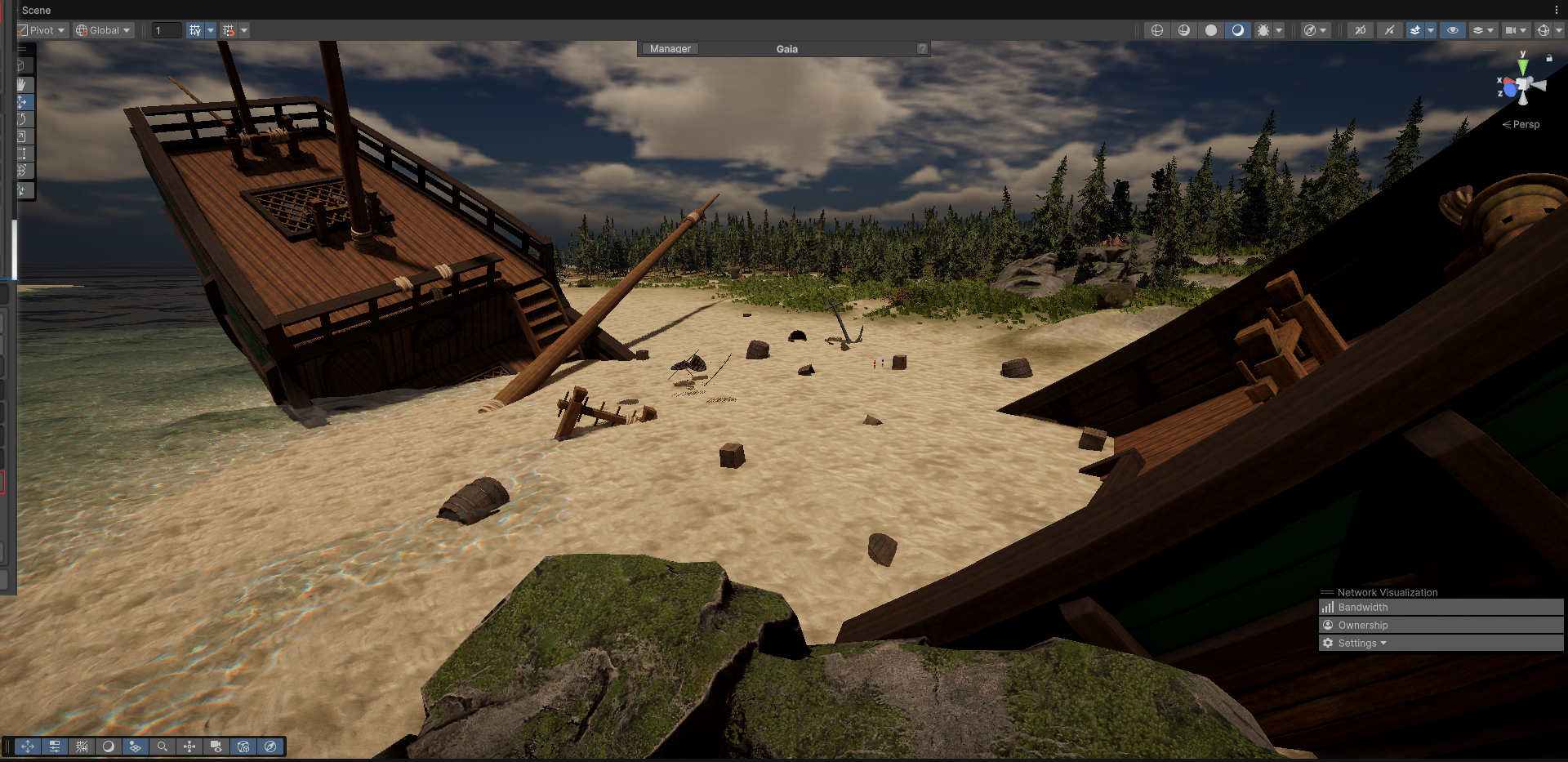

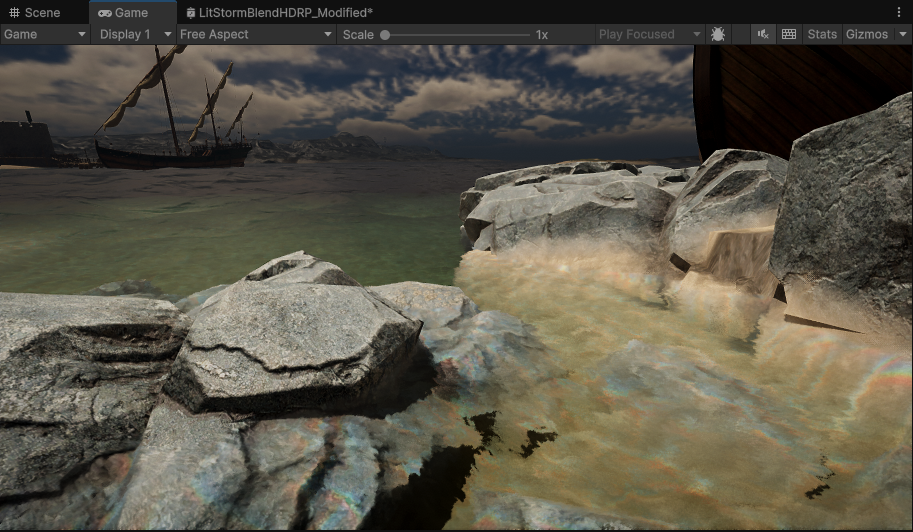

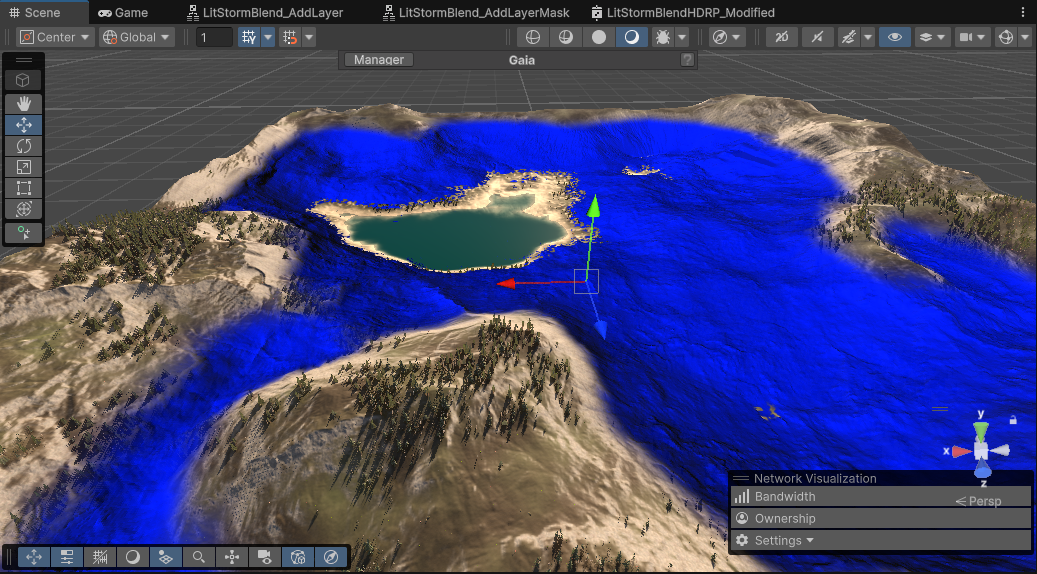

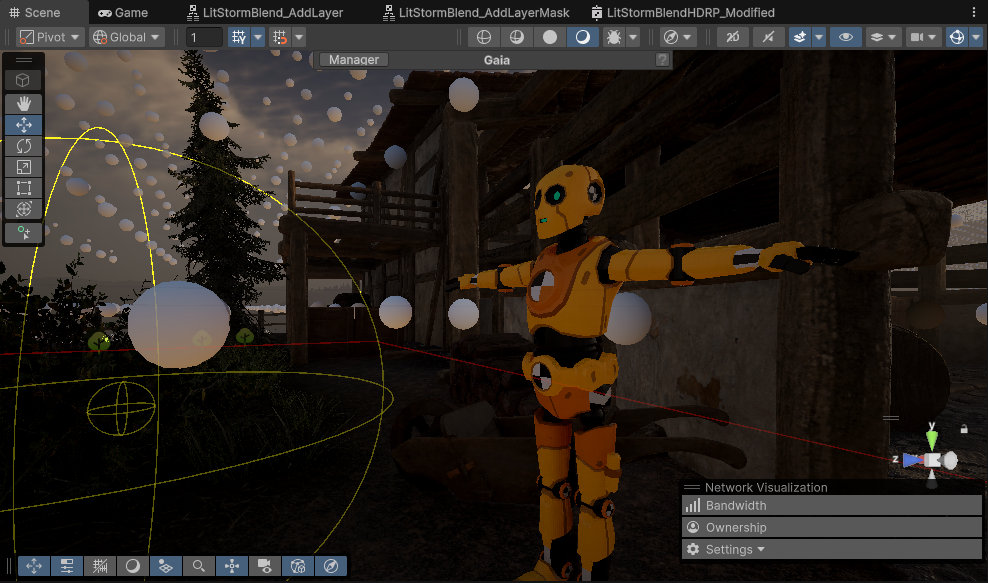

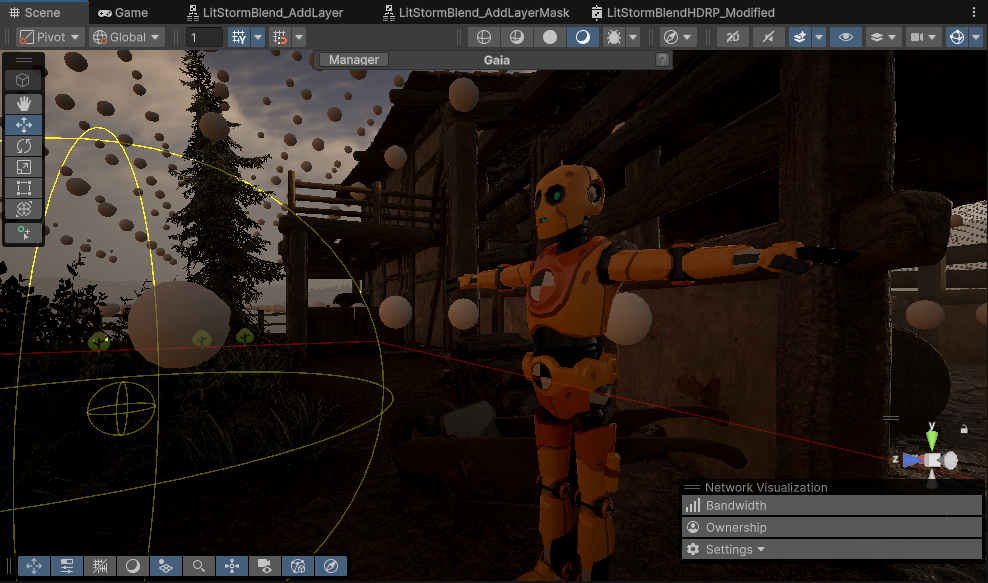

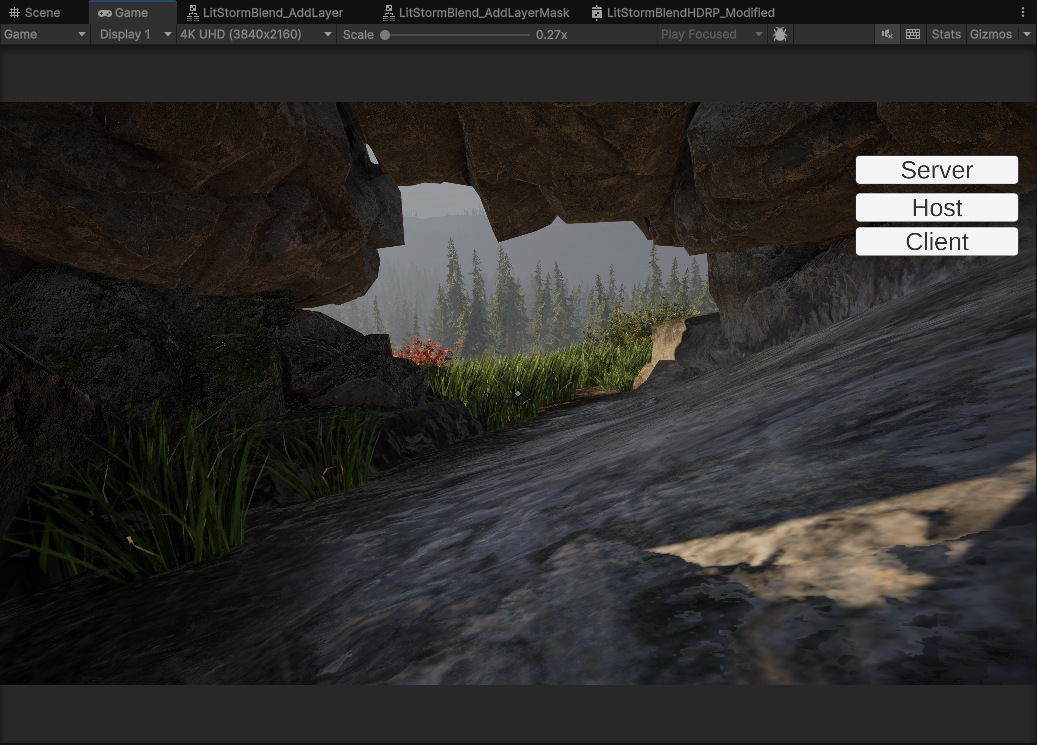

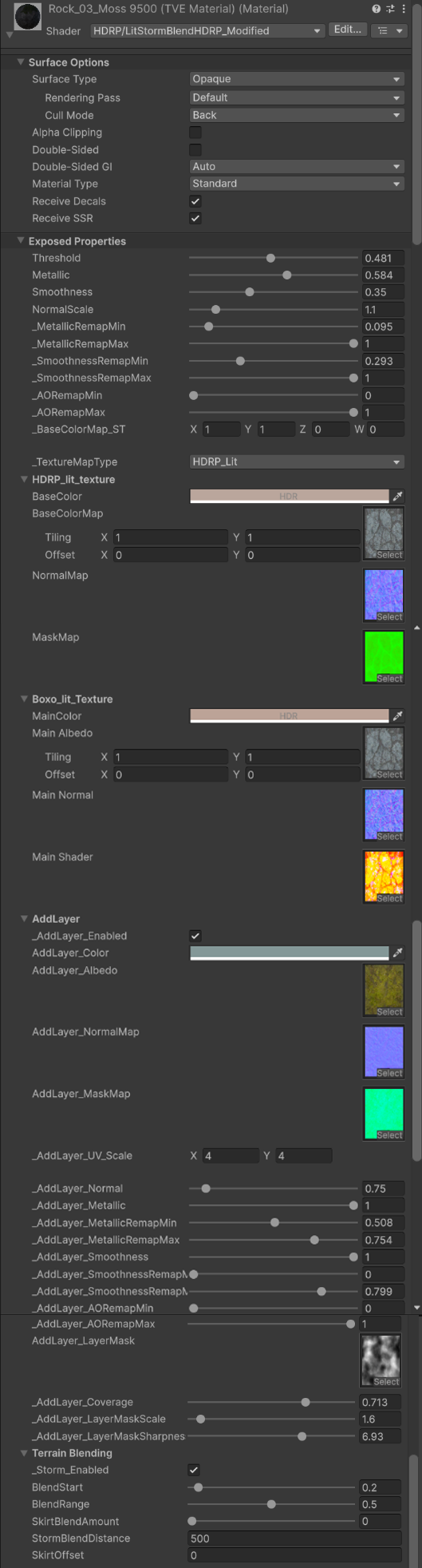

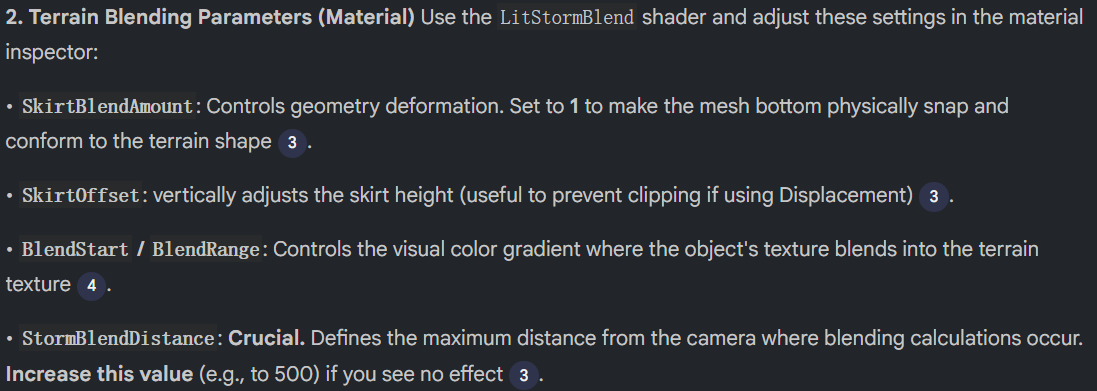

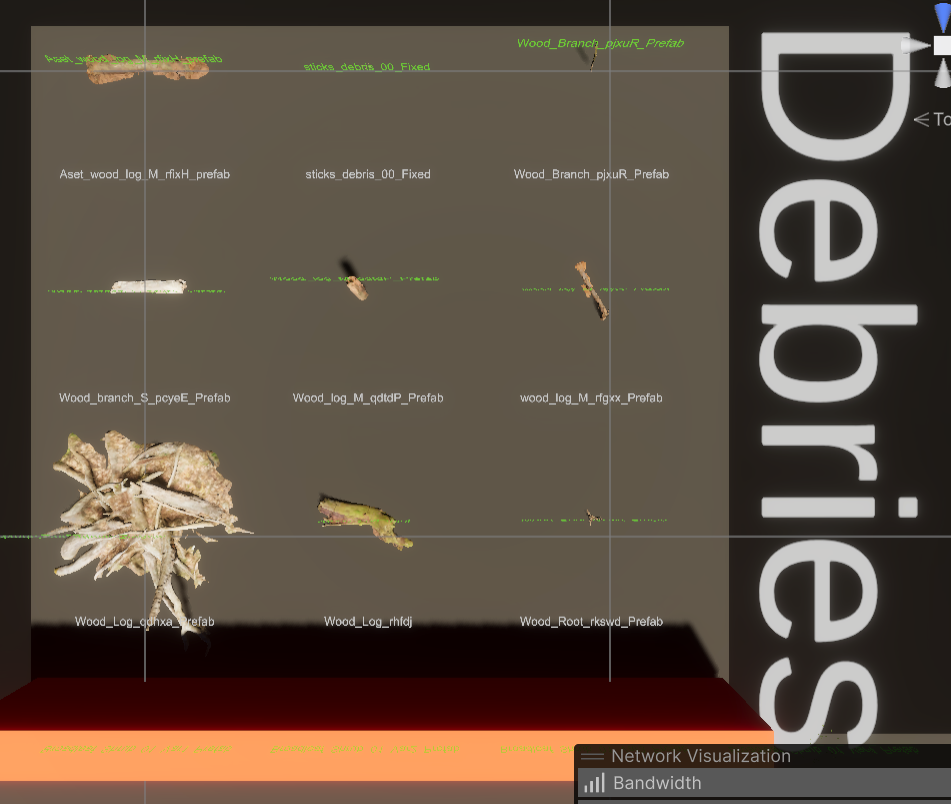

2. Asset Integration & Shader Compatibility (Early July)

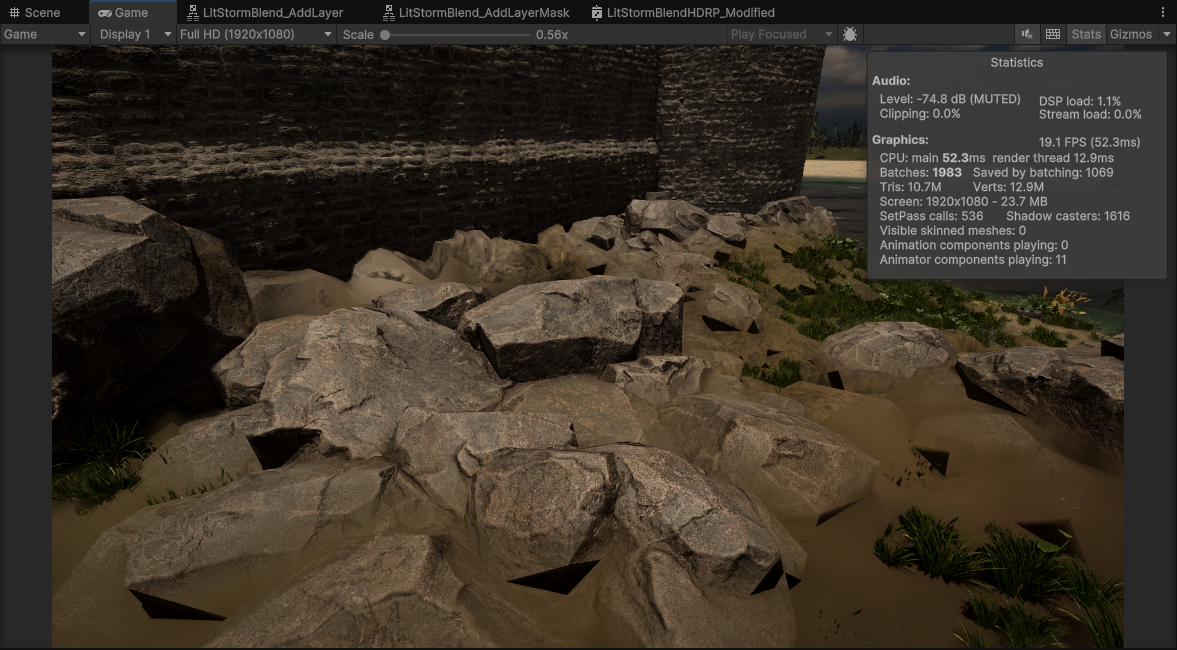

Core Contribution: Resolved shader compatibility issues between the third-party plugin (Storm) and the project’s existing legacy assets (Boxophobic/HDRP), ensuring visual consistency.

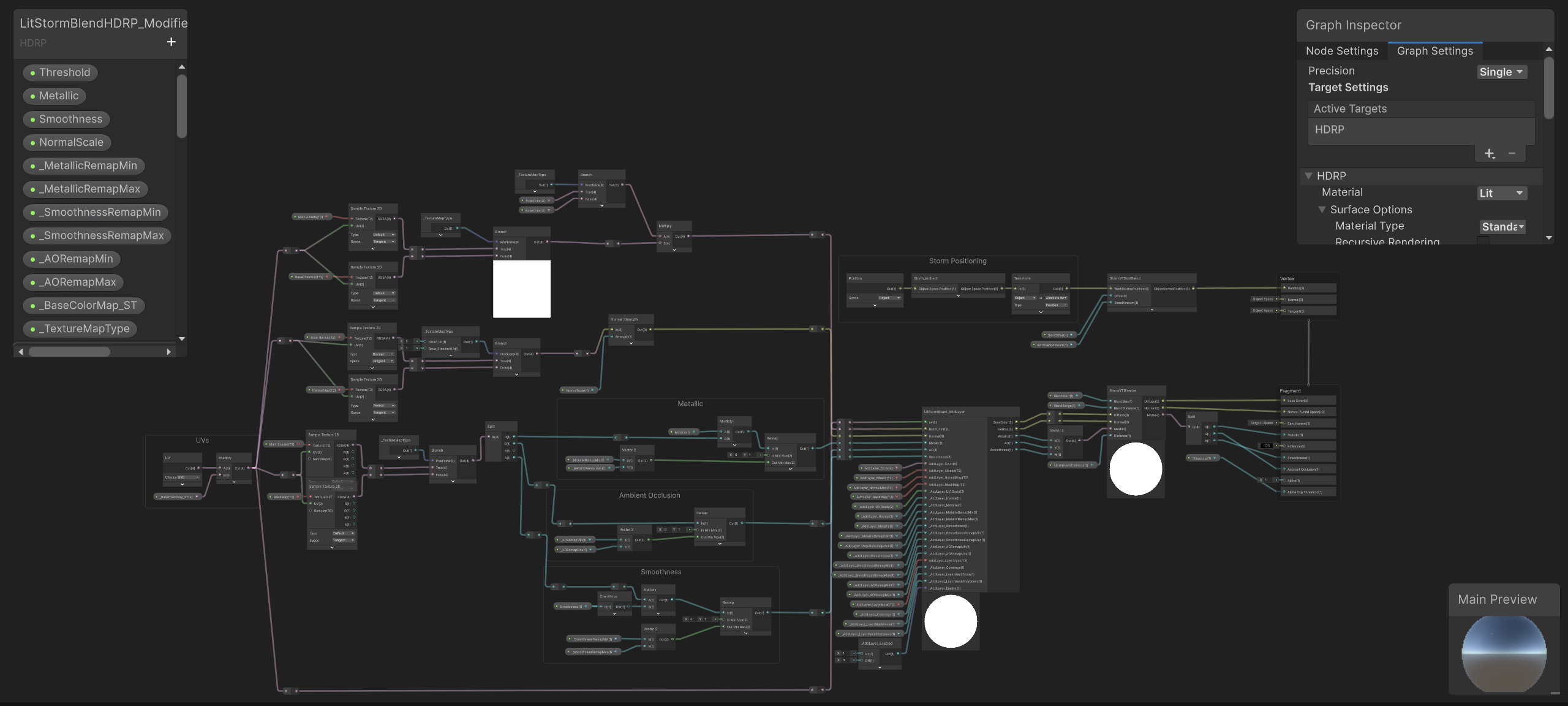

Shader Merging (HLSL/Graph): Manually merged Storm’s Texture-Based Blending logic into the project’s original Boxophobic and HDRP/Lit shaders. This made compatibility a “one-click switch,” allowing art assets to blend seamlessly with terrain textures while preserving their original high-fidelity details.

Shader Merging & Texture Blending

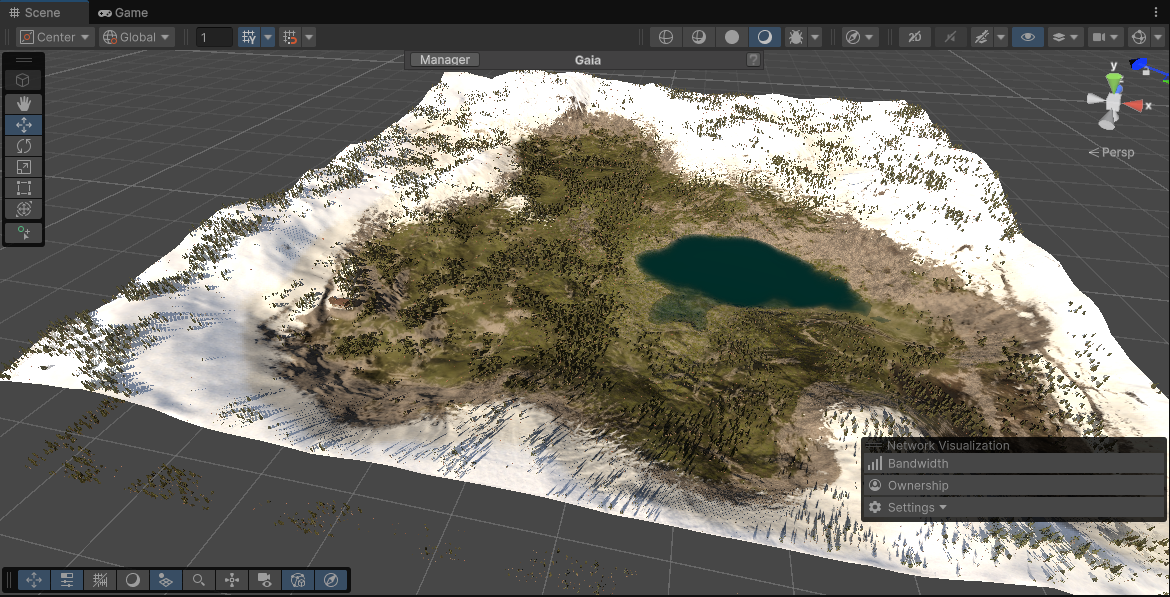

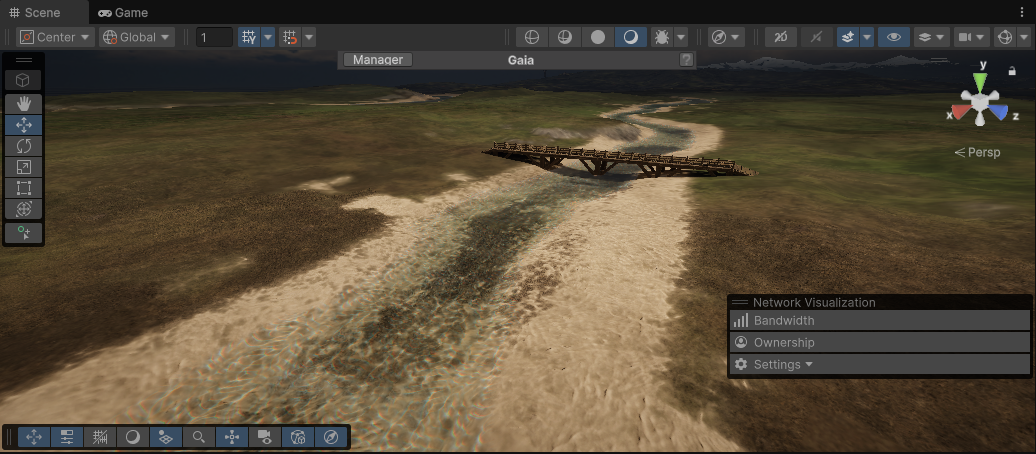

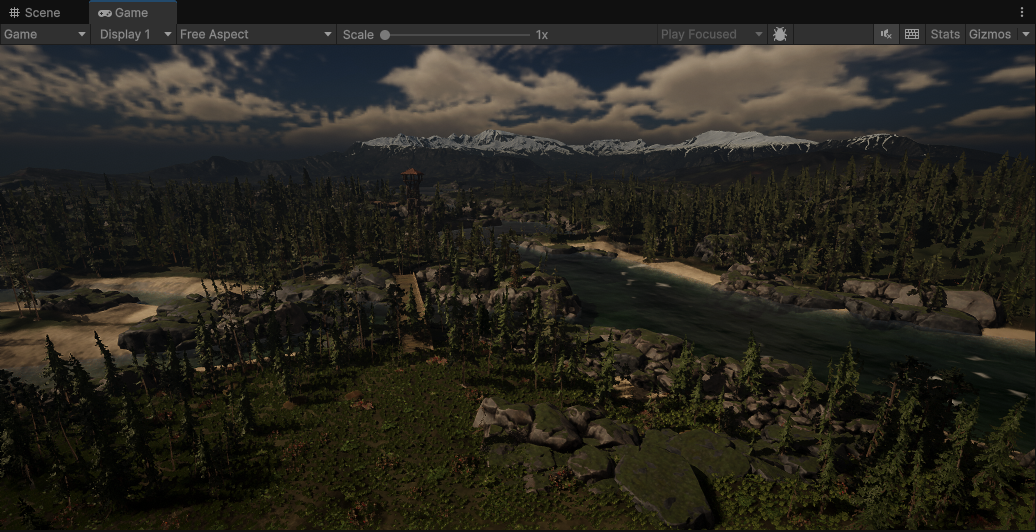

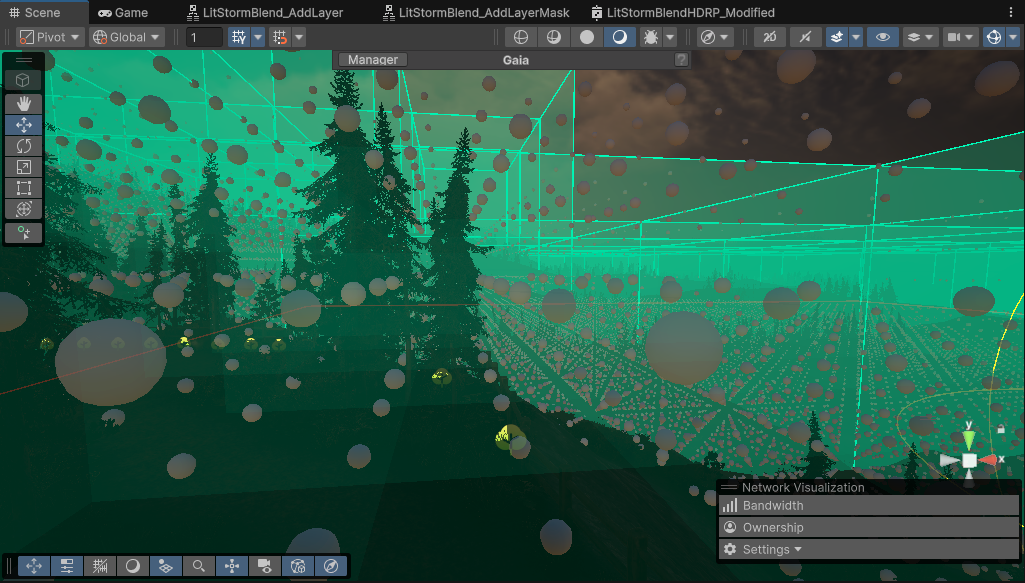

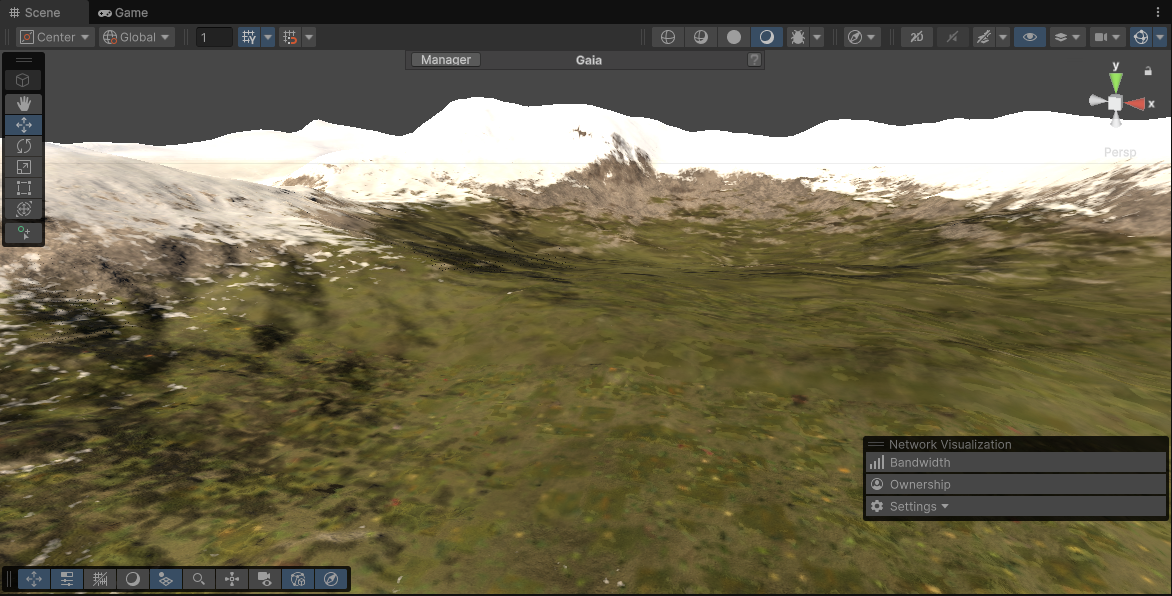

Texture-Based LOD (Vegetation Fallback Strategy):

- The Problem: Distant vegetation (grass/trees) is culled due to LOD strategies, revealing the bare dirt texture of the terrain and causing obvious “visual popping” or bald spots.

- The Clever Solution: Configured a Texture-Based LOD system using Top-down Capture. By baking the vegetation’s color distribution and mapping it to the terrain’s Global Color Map, the terrain texture dynamically inherits the vegetation’s color.

- Result: When physical vegetation meshes are culled, the terrain color seamlessly takes over the visual representation (e.g., dirt turning green to mimic grass), creating the illusion of “infinite view distance” with minimal rendering cost.
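The handover described above can be sketched as a distance-driven blend. This is a conceptual illustration, not Storm's actual shader: the function names, the linear fade, and the `coverage` term (how much of the texel the baked capture marks as vegetated) are assumptions.

```python
def lerp(a, b, t):
    """Linear interpolation between two RGB tuples."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))

def terrain_color(base, baked_veg, coverage, distance, cull_start, cull_end):
    """Blend the terrain albedo toward the baked vegetation color as meshes fade out."""
    # 0 while vegetation meshes are still drawn, 1 once they are fully culled.
    fade = min(max((distance - cull_start) / (cull_end - cull_start), 0.0), 1.0)
    return lerp(base, baked_veg, fade * coverage)

# Made-up colors and distances for illustration.
dirt = (0.5, 0.25, 0.125)
grass = (0.25, 0.5, 0.125)
near = terrain_color(dirt, grass, 1.0, 10.0, 80.0, 120.0)
far = terrain_color(dirt, grass, 1.0, 200.0, 80.0, 120.0)
```

Near the camera the terrain keeps its dirt color because real meshes cover it; past the cull distance the terrain texel takes on the baked vegetation color.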

Texture-Based LOD Strategy

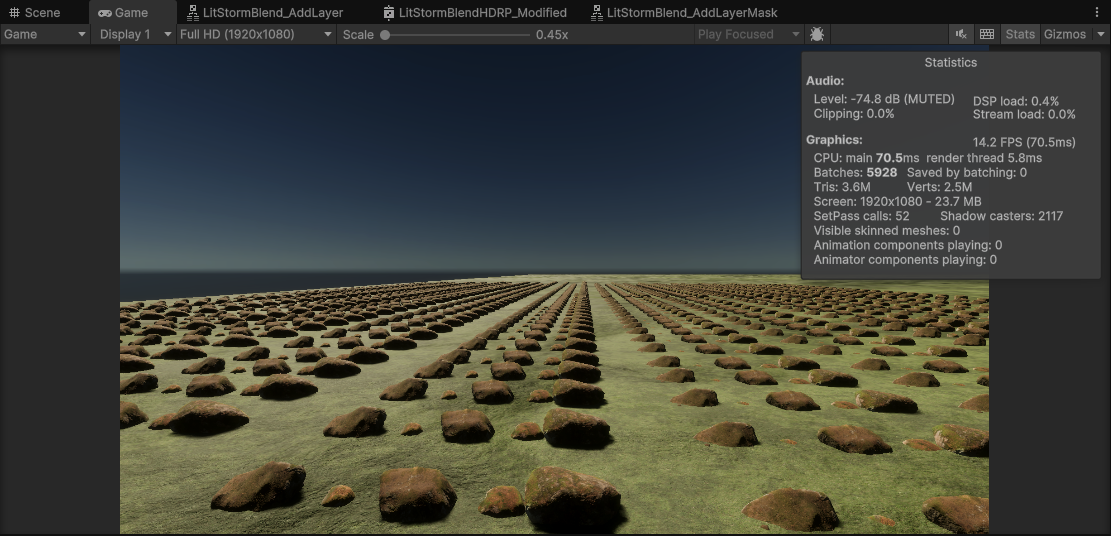

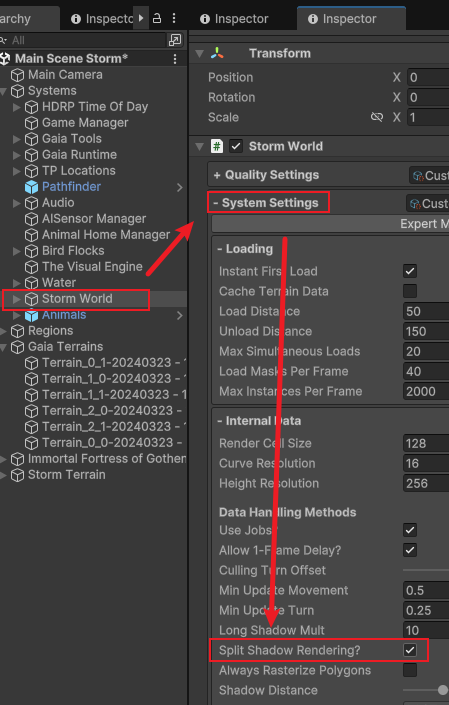

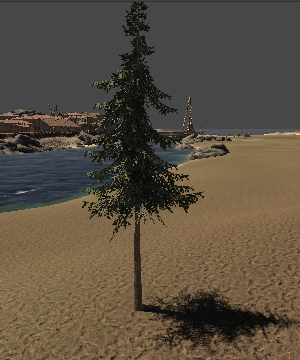

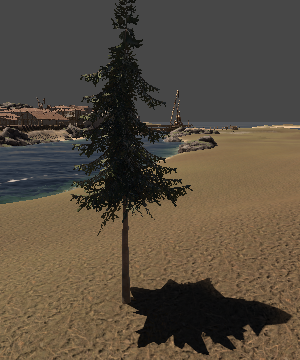

3. Performance Optimization: Shadows (Late July)

Split Shadow Rendering: Enabled Storm’s Split Shadow Rendering strategy.

- Technical Rationale: In the default pipeline, the Shadow Pass and Main Pass share the same high-poly LODs, which makes shadow casters difficult to batch. Splitting the two passes resolves this.

- Action: Configured vegetation to use ultra-low-poly proxies in the Shadow Pass, significantly boosting performance without compromising shadow silhouettes.

Geometry Comparison: High Poly Main vs. Low Poly Shadow Caster

Performance Analysis: Shadow Pass Optimization

4. AI Analysis & Gamescom (August - September)

AI Tuning: Optimized sensory detection parameters for pack animals (Wolves/Deer) to balance AI behavioral complexity with performance.

Gamescom Exhibition: Assisted the team in exhibiting the demo at Gamescom 2025. I personally helped construct the team booth and sub-leased space to other indie developers, networking with peers including the developers of Holdfast (Anvil Game Studios).

Team Insomnia at Gamescom Booth

5. Lighting Architecture Overhaul (October)

Benchmark & Gap Analysis

Initial Attempt: Early in the project, I attempted a “fully baked” approach: a pipeline combining Baked Lightmaps with APV.

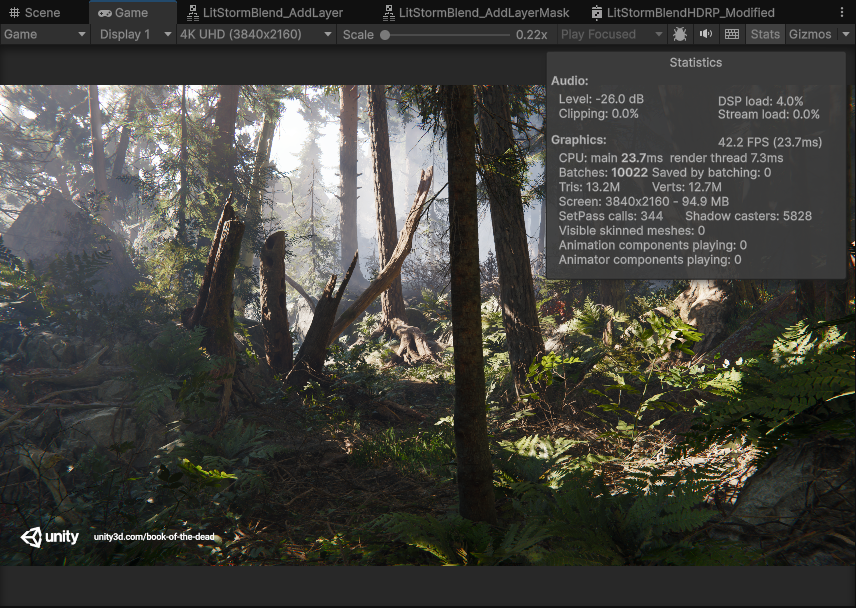

Empirical Evidence: Even with Lightmaps, the visual fidelity fell far short of the Unity official demo Book of the Dead (BotD).

Benchmark Target: Book of the Dead

Initial Attempt: Spriggan (Flat lighting, lack of depth)

Root Cause Analysis: Lightmaps were not the core solution to the visual gap. BotD’s visual impact comes from a “Trinity” of spatial construction (occlusion, reflection, and volumetric fog) plus a refined material response, all of which were missing in my initial attempt:

- Occlusion: Lacked strong micro-occlusion; objects felt like they were “floating” on the terrain.

- Reflection: Lacked a “deliberately designed” reflection volume placement, failing to render the “oily/waxy” specular quality of leaves and wet rocks seen in BotD.

- Volumetric Fog: Fog failed to carry lighting information, so light/shadow relationships could not extend from “surface” to “volume.”

- Material Response (SSS): Vegetation materials looked too “plastic.”

Quick Fix (SSS):

Addressed the SSS deficiency by fine-tuning the Shader (Diffusion Profile & Normal Intensity), quickly resolving the material look.

However, to address the core occlusion and dynamic lighting issues, I decided to abandon Lightmaps and build a new fully dynamic “Combo” architecture.

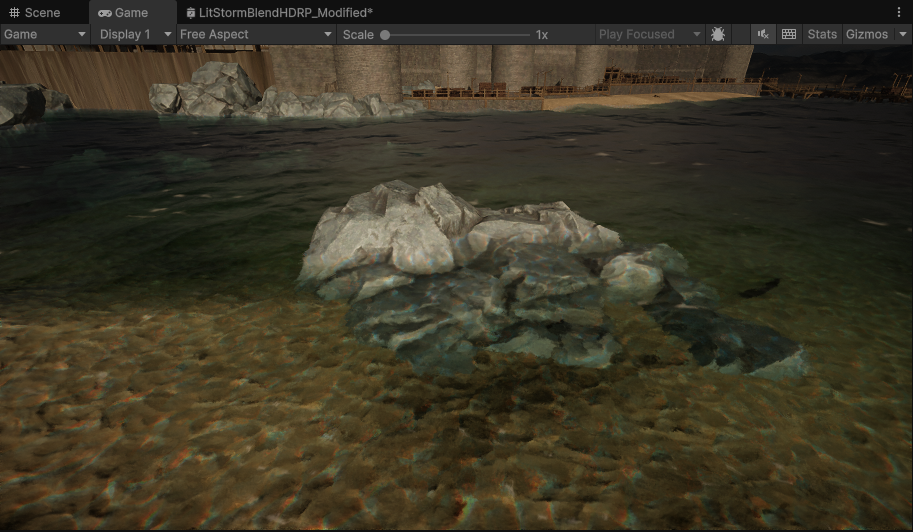

Dynamic Lighting Architecture: SSGI & APV Synergy

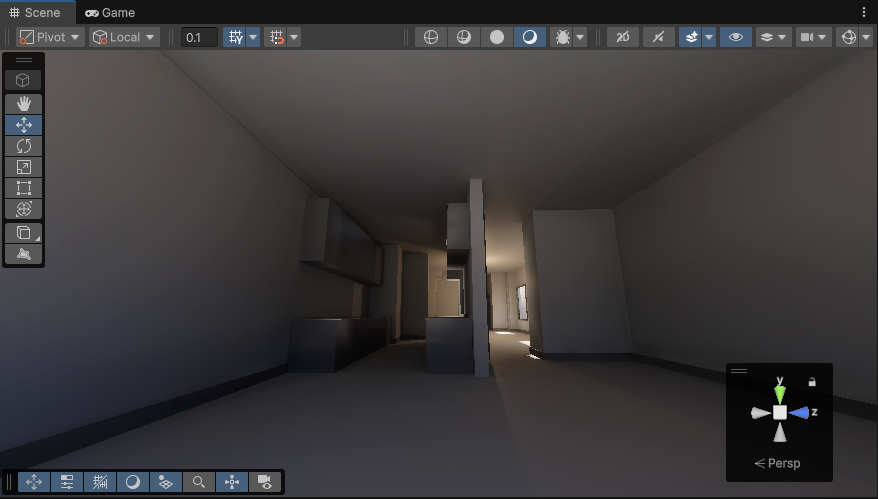

Challenge (Light Leaking): After I switched to Adaptive Probe Volumes (APV) for fully dynamic lighting, indoor scenes lit by APV alone suffered from low-frequency light leaking and noise due to insufficient sampling precision.

Artifacts: APV Low-frequency Light Leaking

Strategy (Dual Complementary): I introduced SSGI as a high-frequency detail layer. SSGI’s screen-space ray tracing smooths and blends the APV’s low-frequency data, which effectively eliminated the noise artifacts. At the same time, I relied on Unity’s fallback mechanism to cover SSGI’s lack of off-screen information.

(Unity Doc): “Because Adaptive Probe Volumes can cover a whole scene, screen space effects can fall back to Light Probes to get lighting data from GameObjects that are off-screen or occluded.”

Result:

SSGI successfully smoothed APV sampling artifacts and enhanced micro-occlusion, while the pipeline seamlessly fell back to APV data when SSGI tracing missed or went off-screen.
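The fallback logic reduces to a per-pixel choice, sketched below. This is a conceptual illustration only, not Unity's implementation; the function name and the use of `None` to mark a missed or off-screen ray are assumptions.

```python
def resolve_indirect(ssgi_samples, apv_samples):
    """Pick the indirect diffuse radiance for each pixel.

    ssgi_samples: per-pixel RGB tuples, or None where the screen-space ray
                  missed or left the screen
    apv_samples:  per-pixel RGB tuples interpolated from the probe volume
    """
    return [
        ssgi if ssgi is not None else apv
        for ssgi, apv in zip(ssgi_samples, apv_samples)
    ]

# Made-up data: the first pixel has a valid screen-space hit, the second does not.
ssgi = [(1.0, 0.0, 0.0), None]
apv = [(0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
resolved = resolve_indirect(ssgi, apv)
```

Pixels with a valid screen-space hit get the high-frequency SSGI result, while every other pixel still receives plausible low-frequency lighting from the probes.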

Solution: SSGI Smoothing + APV Fallback

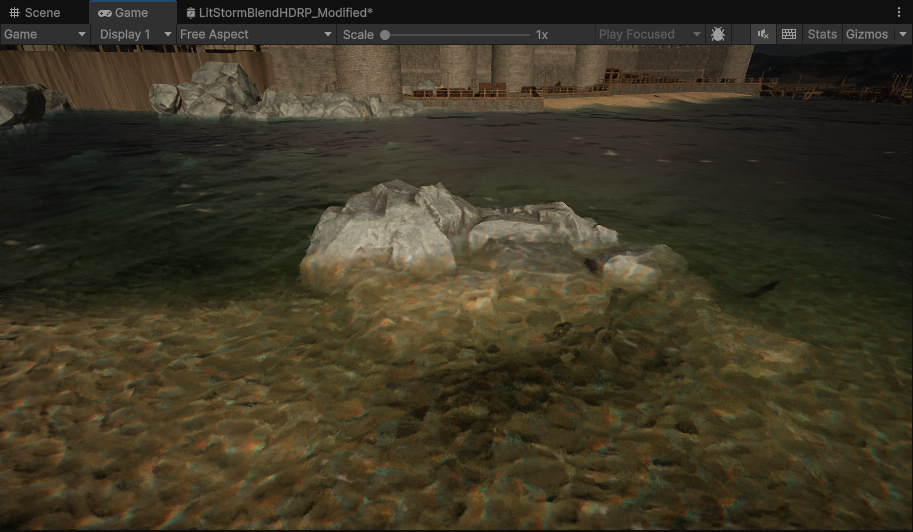

Reflection Strategy (“Subtraction” Logic)

Addressing the “lack of designed reflections,” I did not blindly increase probe density. Instead, I followed the documentation’s logic to “subtract”:

(Unity Doc): “Unity can use the data in Adaptive Probe Volumes to adjust lighting from Reflection Probes so it more closely matches the local environment…”

Optimization: I drastically reduced the number of Reflection Probes. By using only a Global Probe combined with APV correction data (Normalization/Occlusion), I achieved accurate reflection occlusion in the forest.
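One way to picture the APV correction is as a brightness ratio applied to the global probe's reflection. The sketch below is a simplified assumption about how such a normalization could work, not Unity's actual code; the function names, the luminance-ratio formula, and the sample values are illustrative.

```python
def luminance(rgb):
    """Rec. 709 relative luminance of a linear RGB tuple."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def normalize_reflection(reflection, probe_irradiance, apv_irradiance, eps=1e-4):
    """Scale a reflection sample by the ratio of local to probe-capture irradiance.

    probe_irradiance: diffuse lighting at the point where the probe was captured
    apv_irradiance:   diffuse lighting APV reports at the shaded surface
    """
    factor = luminance(apv_irradiance) / max(luminance(probe_irradiance), eps)
    return tuple(c * factor for c in reflection)

# Made-up values: a probe captured under open sky, a surface under dense canopy.
sky_reflection = (0.8, 0.9, 1.0)
under_canopy = normalize_reflection(
    sky_reflection, (1.0, 1.0, 1.0), (0.25, 0.25, 0.25)
)
```

Under the canopy the local irradiance is a quarter of what the probe saw, so the bright sky reflection is dimmed by the same ratio instead of needing a dedicated probe for that spot.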

APV-Guided Reflection Occlusion

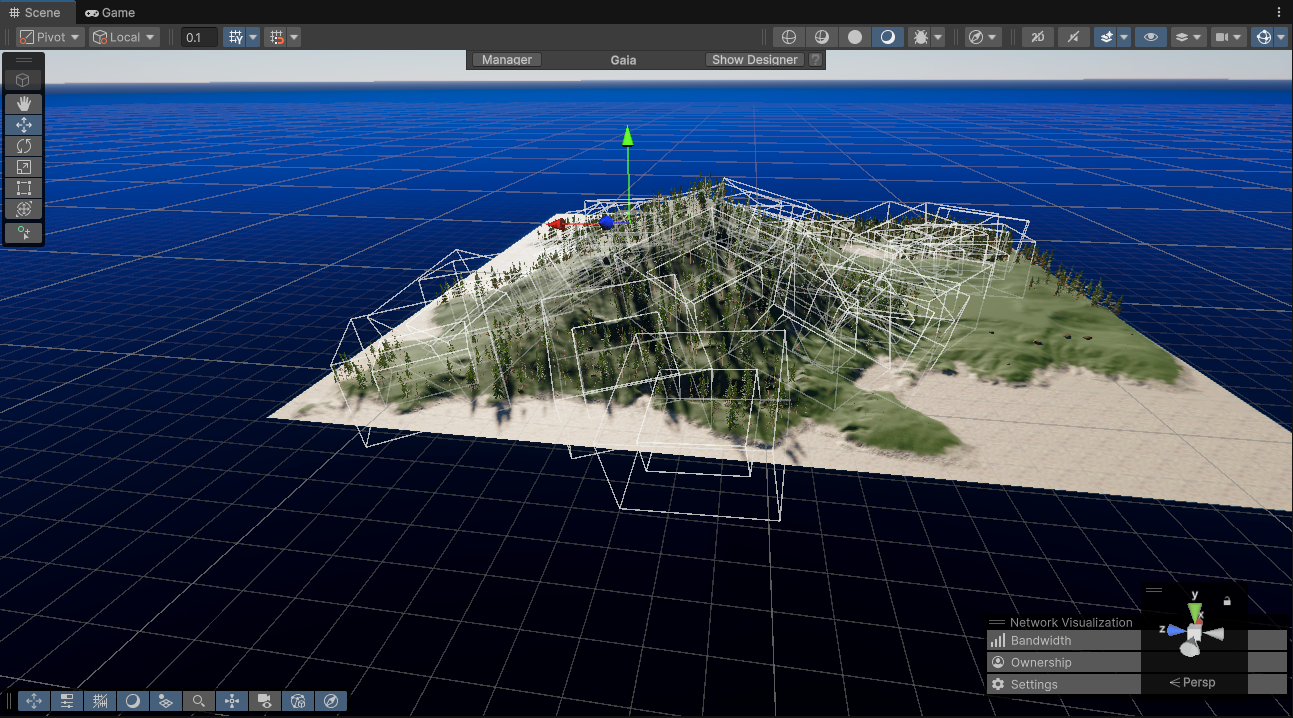

Atmospheric Synergy: High-Precision Volumetric Fog

To fix the “ineffective Volumetric Fog,” I used Gaia to identify locations near water sources, in terrain valleys (concave curvature), and within forests, then procedurally spawned a large number of Local Fog Volumes at those locations.
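The three placement criteria can be expressed as a simple rule over terrain data. The sketch below is a stand-alone illustration, not Gaia's API: the grid layout, the discrete-Laplacian valley test, the `water_margin` threshold, and the sample heightmap are all assumptions.

```python
def fog_volume_cells(height, forest, water_level, water_margin=2.0):
    """Return interior (row, col) cells that qualify for a Local Fog Volume."""
    rows, cols = len(height), len(height[0])
    picked = []
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            h = height[r][c]
            neighbours = (
                height[r - 1][c] + height[r + 1][c]
                + height[r][c - 1] + height[r][c + 1]
            )
            # A positive discrete Laplacian means the cell sits lower than
            # its surroundings, i.e. the terrain is concave (a valley).
            in_valley = neighbours - 4 * h > 0
            near_water = abs(h - water_level) <= water_margin
            if near_water or in_valley or forest[r][c]:
                picked.append((r, c))
    return picked

# Made-up 4x4 heightmap (metres) and forest mask.
height = [
    [10, 10, 10, 10],
    [10, 1, 10, 10],
    [10, 10, 6, 10],
    [10, 10, 10, 10],
]
forest = [
    [False, False, False, False],
    [False, False, False, False],
    [False, True, False, False],
    [False, False, False, False],
]
cells = fog_volume_cells(height, forest, water_level=0.0)
```

Each returned cell would then receive a fog volume, so fog accumulates where it would naturally pool rather than being spread uniformly across the map.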

Procedural Placement of Local Fog Volumes

(Unity Doc): “If you use volumetric fog, the per-pixel probe selection provides more accurate lighting for the variations in a fog mass.”

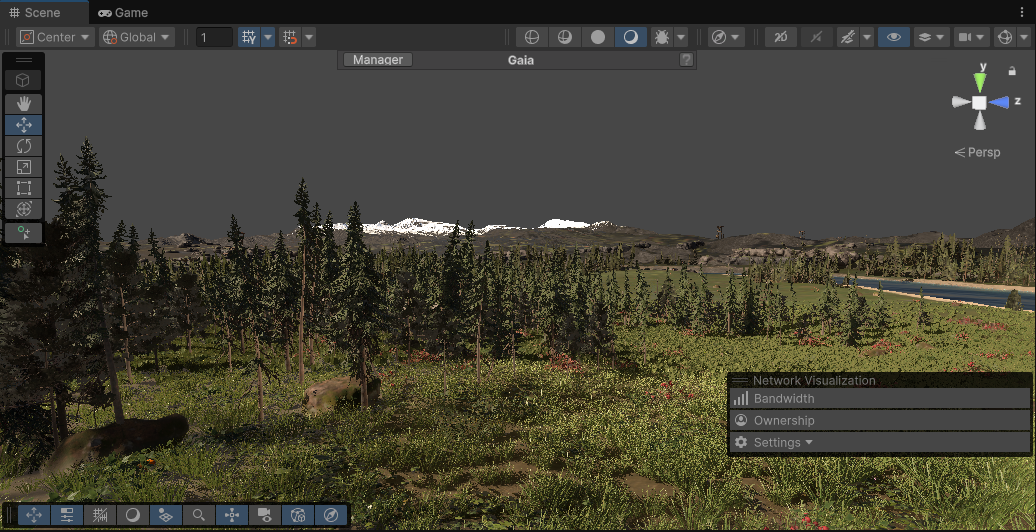

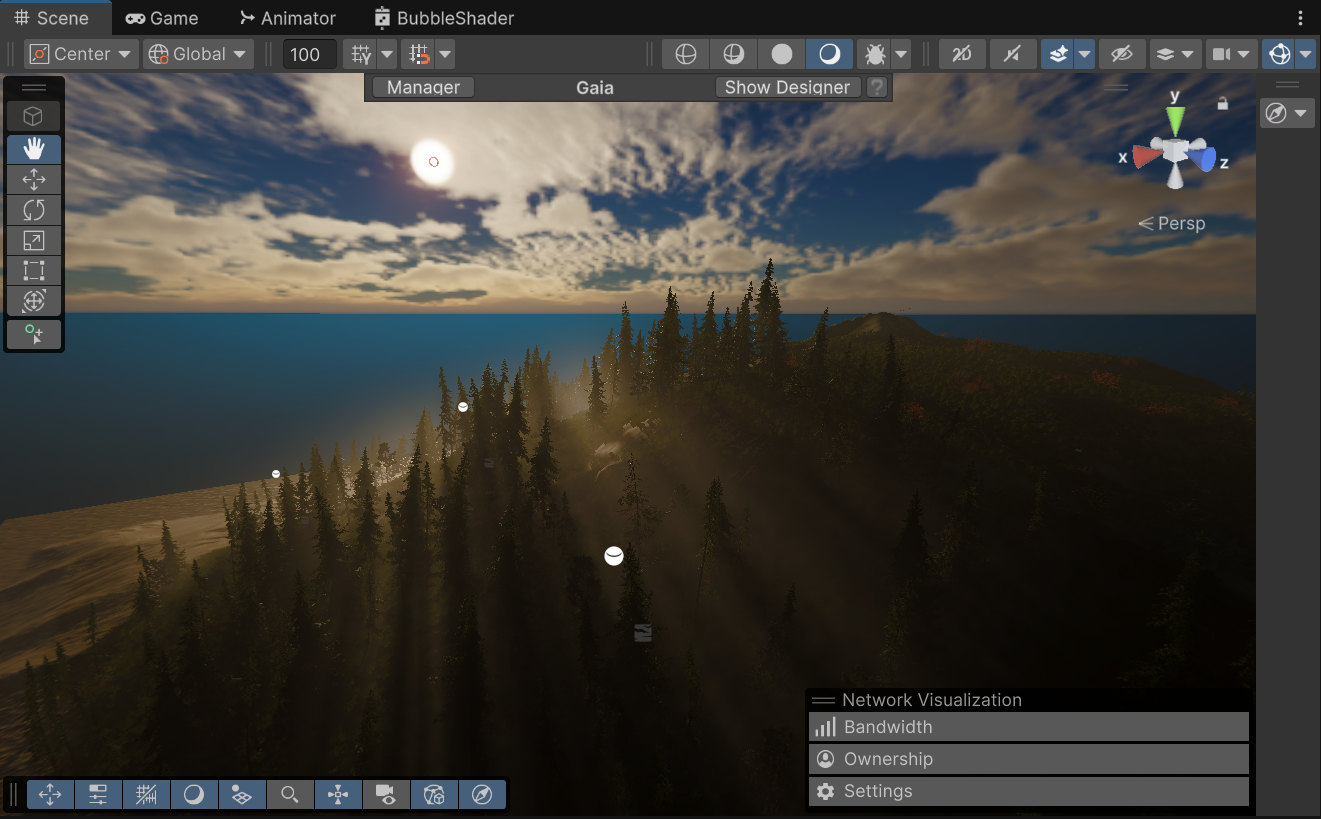

Result: When sunlight filters through leaves (Occlusion), illuminates physically based fog (Fog), and creates highlights on wet surfaces (Reflection), APV, Reflection, and Fog form a closed loop that successfully replicates the visual benchmark of Book of the Dead.

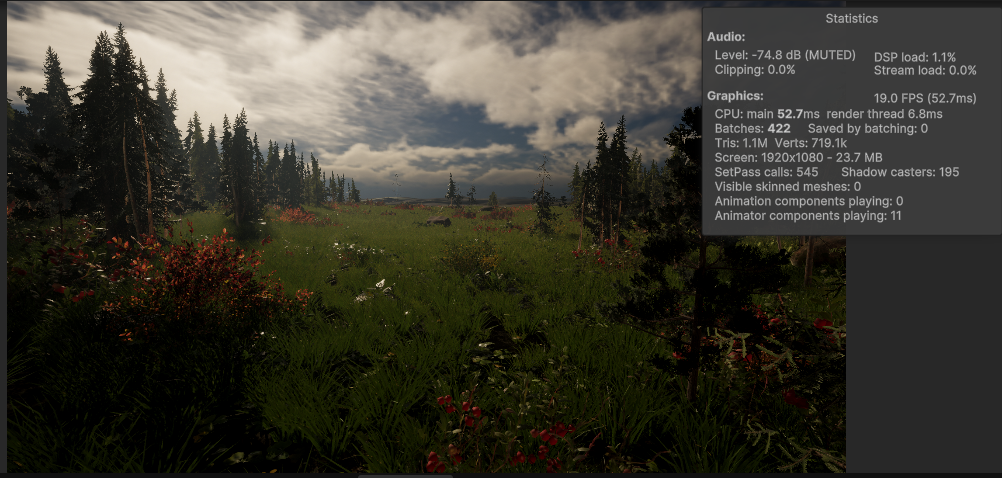

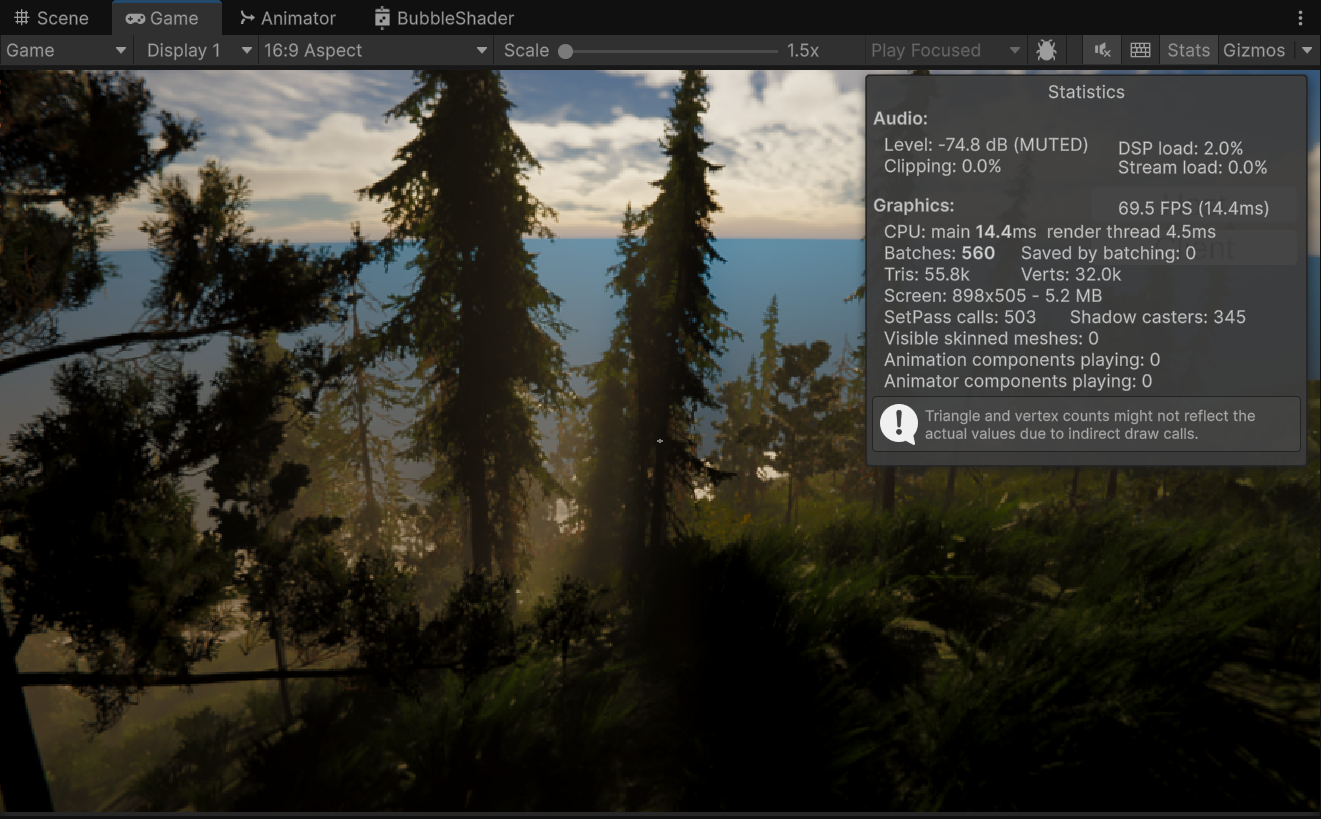

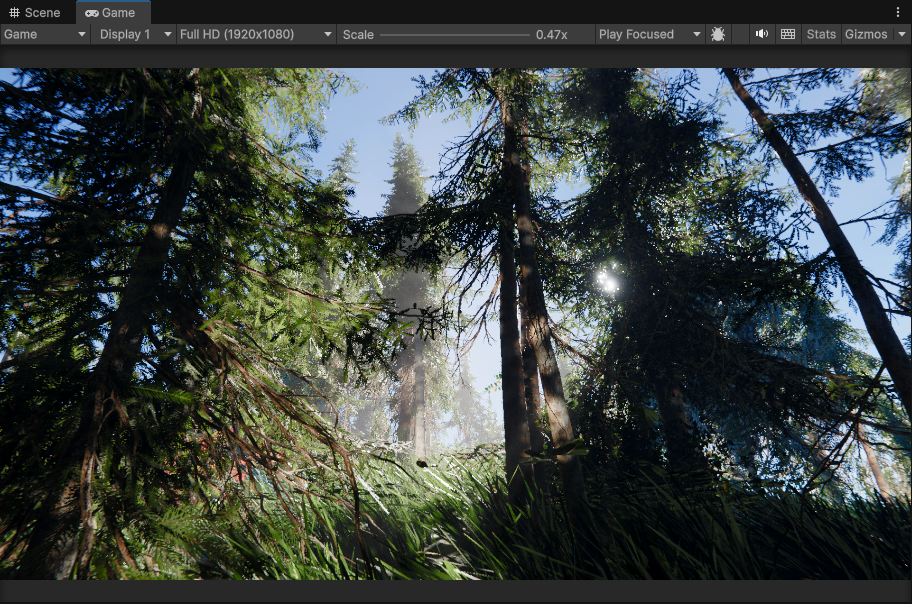

Final Look: Replicating the "Book of the Dead" Atmosphere